pacman::p_load(rgdal, spdep, tmap, sf, ClustGeo,

ggpubr, cluster, factoextra,

heatmaply, corrplot, tidyverse, GGally)Take-home Exercise 2: Regionalisation of Multivariate Water Point Attributes with Non-spatially Constrained and Spatially Constrained Clustering Methods

The Task

The specific tasks of this take-home exercise are as follows:

Using appropriate sf method, import the shapefile into R and save it in a simple feature data frame format. Note that there are three Projected Coordinate Systems of Nigeria, they are: EPSG: 26391, 26392, and 26303. We can use any one of them.

Using appropriate tidyr and dplyr methods, derive the proportion of functional and non-functional water point at LGA level (i.e. ADM2).

Combining the geospatial and aspatial data frame into simple feature data frame.

Delineating water point measures functional regions by using conventional hierarchical clustering.

Delineating water point measures functional regions by using spatially constrained clustering algorithms.

Thematic Mapping

- Plot to show the water points measures derived by using appropriate statistical graphics and choropleth mapping technique.

Analytical Mapping

- Plot functional regions delineated by using both non-spatially constrained and spatially constrained clustering algorithms.

Overview

The process of creating regions is called regionalisation. A regionalisation is a special kind of clustering where the objective is to group observations which are similar in their statistical attributes, but also in their spatial location.

In this take-home exercise, we are required to regionalise Nigeria by using, but not limited to the following measures:

Total number of functional water points

Total number of nonfunctional water points

Percentage of functional water points

Percentage of non-functional water points

Percentage of main water point technology (i.e. Hand Pump)

Percentage of usage capacity (i.e. < 1000, >=1000)

Percentage of rural water points

Installing & Loading R Packages

The R packages needed for this exercise are as follows:

Spatial data handling

- sf, rgdal and spdep

Attribute data handling

- tidyverse, especially readr, ggplot2 and dplyr

Choropleth mapping

- tmap

Multivariate data visualisation and analysis

- corrplot, ggpubr, heatmaply and GGally

Cluster analysis

cluster

ClustGeo

factoextra

In the code chunk below, p_load() of pacman package is used to install and load the following R packages into R environment.

The Data

Aspatial data

For the purpose of this exercise, data from WPdx Global Data Repositories will be used. There are two versions of the data. They are: WPdx-Basic and WPdx+. We are required to use WPdx+ data set.

Geospatial data

Nigeria Level-2 Administrative Boundary (also known as Local Government Area) polygon features GIS data will be used in this exercise. The data can be downloaded either from The Humanitarian Data Exchange portal or geoBoundaries.

Importing water point data

First, we are going to import the water point data into R environment.

wp_nga <- read_csv("rawdata/WPdx.csv") %>%

filter(`#clean_country_name` == "Nigeria")Thing to note from the code chunk above:

The original file name is called

Water_Point_Data_Exchange_-_PlusWPdx.csv, it has been rename toWPdx.csvfor easy encoding.Instead of using read.csv() of Base R to import the csv file into R, read_csv() is readr package is used. This is because during the initial data exploration, we notice that there is at least one field name with space between the field name (ie. New Georeferenced Column).

The data file contains water point data of many countries. In this study, we are interested on water point in Nigeria. Hence, filter() of dplyr is used to extract out records belonging to Nigeria only.

Next, write_rds() of readr package is used to save the extracted data table (i.e. wp_nga) into an output file in rds data format. The output file is called wpdx_nga.rds and it is saved in rawdata sub-folder, which will not be uploaded to Git.

wpdx_nga <- write_rds(wp_nga,

"rawdata/wpdx_nga.rds")wpdx_nga <- read_rds("rawdata/wpdx_nga.rds")Convert wkt data

After the data are imported into R environment, it is a good practice to review both the data structure and the data table if it is in tibble data frame format in R Studio.

wpdx_nga# A tibble: 95,008 × 70

row_id `#source` #lat_…¹ #lon_…² #repo…³ #stat…⁴ #wate…⁵ #wate…⁶ #wate…⁷

<dbl> <chr> <dbl> <dbl> <chr> <chr> <chr> <chr> <chr>

1 429068 GRID3 7.98 5.12 08/29/… Unknown <NA> <NA> Tapsta…

2 222071 Federal Minis… 6.96 3.60 08/16/… Yes Boreho… Well Mechan…

3 160612 WaterAid 6.49 7.93 12/04/… Yes Boreho… Well Hand P…

4 160669 WaterAid 6.73 7.65 12/04/… Yes Boreho… Well <NA>

5 160642 WaterAid 6.78 7.66 12/04/… Yes Boreho… Well Hand P…

6 160628 WaterAid 6.96 7.78 12/04/… Yes Boreho… Well Hand P…

7 160632 WaterAid 7.02 7.84 12/04/… Yes Boreho… Well Hand P…

8 642747 Living Water … 7.33 8.98 10/03/… Yes Boreho… Well Mechan…

9 642456 Living Water … 7.17 9.11 10/03/… Yes Boreho… Well Hand P…

10 641347 Living Water … 7.20 9.22 03/28/… Yes Boreho… Well Hand P…

# … with 94,998 more rows, 61 more variables: `#water_tech_category` <chr>,

# `#facility_type` <chr>, `#clean_country_name` <chr>, `#clean_adm1` <chr>,

# `#clean_adm2` <chr>, `#clean_adm3` <chr>, `#clean_adm4` <chr>,

# `#install_year` <dbl>, `#installer` <chr>, `#rehab_year` <lgl>,

# `#rehabilitator` <lgl>, `#management_clean` <chr>, `#status_clean` <chr>,

# `#pay` <chr>, `#fecal_coliform_presence` <chr>,

# `#fecal_coliform_value` <dbl>, `#subjective_quality` <chr>, …Notice that the newly imported tibble data frame (i.e. wpdx_nga) contains a field called New Georeferenced Column which represent spatial data in a textual format. In fact, this kind of text file is popularly known as Well Known Text in short wkt.

Two steps will be used to convert an asptial data file in wkt format into a sf data frame by using sf. First, st_as_sfc() of sf package is used to derive a new field called Geometry as shown in the code chunk below.

wpdx_nga$Geometry = st_as_sfc(wpdx_nga$`New Georeferenced Column`)If we check the wpdx_nga data frame and scroll to the last field now, we will see a new field called Geometry has been added.

Next, st_sf() will be used to convert the tibble data frame into sf data frame.

wpdx_sf <- st_sf(wpdx_nga, crs=4326) The code chunk below reveals the complete information of a feature object by using head() of Base R.

head(wpdx_sf, n=5)Simple feature collection with 5 features and 70 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 3.597668 ymin: 6.48694 xmax: 7.92972 ymax: 7.98

Geodetic CRS: WGS 84

# A tibble: 5 × 71

row_id #sour…¹ #lat_…² #lon_…³ #repo…⁴ #stat…⁵ #wate…⁶ #wate…⁷ #wate…⁸ #wate…⁹

<dbl> <chr> <dbl> <dbl> <chr> <chr> <chr> <chr> <chr> <chr>

1 429068 GRID3 7.98 5.12 08/29/… Unknown <NA> <NA> Tapsta… Tapsta…

2 222071 Federa… 6.96 3.60 08/16/… Yes Boreho… Well Mechan… Mechan…

3 160612 WaterA… 6.49 7.93 12/04/… Yes Boreho… Well Hand P… Hand P…

4 160669 WaterA… 6.73 7.65 12/04/… Yes Boreho… Well <NA> <NA>

5 160642 WaterA… 6.78 7.66 12/04/… Yes Boreho… Well Hand P… Hand P…

# … with 61 more variables: `#facility_type` <chr>,

# `#clean_country_name` <chr>, `#clean_adm1` <chr>, `#clean_adm2` <chr>,

# `#clean_adm3` <chr>, `#clean_adm4` <chr>, `#install_year` <dbl>,

# `#installer` <chr>, `#rehab_year` <lgl>, `#rehabilitator` <lgl>,

# `#management_clean` <chr>, `#status_clean` <chr>, `#pay` <chr>,

# `#fecal_coliform_presence` <chr>, `#fecal_coliform_value` <dbl>,

# `#subjective_quality` <chr>, `#activity_id` <chr>, `#scheme_id` <chr>, …Importing Nigeria LGA level boundary data

For the purpose of this exercise, shapefile downloaded from geoBoundaries portal will be used.

nga <- st_read(dsn = "data",

layer = "geoBoundaries-NGA-ADM2",

crs = 4326) %>%

select(shapeName)Reading layer `geoBoundaries-NGA-ADM2' from data source

`C:\Jacobche\ISSS624\Take-home_Ex\data' using driver `ESRI Shapefile'

Simple feature collection with 774 features and 5 fields

Geometry type: MULTIPOLYGON

Dimension: XY

Bounding box: xmin: 2.668534 ymin: 4.273007 xmax: 14.67882 ymax: 13.89442

Geodetic CRS: WGS 84Correcting duplicated LGAs

The code chunk below (as suggested by Jordan) uses duplicated() of base R which has managed to identify 12 LGAs (ie shapeName) having duplicates.

nga <- (nga[order(nga$shapeName), ])

nga_dup <- nga$shapeName[nga$shapeName %in% nga$shapeName[duplicated(nga$shapeName)] ]

nga_dup [1] "Bassa" "Bassa" "Ifelodun" "Ifelodun" "Irepodun" "Irepodun"

[7] "Nasarawa" "Nasarawa" "Obi" "Obi" "Surulere" "Surulere"After some research, the corrected index and shapeName should be as follows:

| Index | shapeName |

|---|---|

| 94 | Bassa Kogi |

| 95 | Bassa Plateau |

| 304 | Ifelodun Kwara |

| 305 | Ifelodun Osun |

| 355 | Irepodun Kwara |

| 356 | Irepodun Osun |

| 519 | Nasarawa Kano |

| 520 | Nasarawa |

| 546 | Obi Benue |

| 547 | Obi Nasarawa |

| 693 | Surulere Lagos |

| 694 | Surulere Oyo |

The code chunk below is used to correct the respective shapeName values.

nga$shapeName[c(94,95,304,305,355,356,519,520,546,547,693,694)] <-

c("Bassa Kogi","Bassa Plateau","Ifelodun Kwara","Ifelodun Osun",

"Irepodun Kwara","Irepodun Osun","Nassarawa Kano","Nassarawa",

"Obi Benue","Obi Nasarawa","Surulere Lagos","Surulere Oyo")The code chunk below uses length() of base R to validate that there are no more duplicates in LGAs.

length((nga$shapeName[ nga$shapeName %in% nga$shapeName[duplicated(nga$shapeName)] ]))[1] 0Point in Polygon Overlay

Although wpdx_sf sf data frame consists of a field called #clean_adm2 which by right should provide the LGA names of the water points located. However, it is always a good practice to be more cautious when dealing with data accuracy.

In this section, we are going to use a geoprocessing function (or commonly know as GIS analysis) called point-in-polygon overlay to transfer the attribute information in nga sf data frame into wpdx_sf data frame. The code chunk below uses st_join() of sf package to perform a join and a new field called shapeName is now added to wpdx_sf sf data frame.

wpdx_sf <- st_join(wpdx_sf, nga)Data Wrangling

Recoding NA values into string

In the code chunk below, replace_na() is used to recode all the NA values in #status_clean and #water_tech_category fields into Unknown.

wpdx_sf <- wpdx_sf %>%

mutate(`#status_clean` = replace_na(`#status_clean`, "Unknown")) %>%

mutate(`#water_tech_category` = replace_na(`#water_tech_category`, "Unknown"))Extracting useful data points

In the code chunk below, filter() of dplyr is used to select water points of various #status_clean.

func_wp <- wpdx_sf %>%

filter(`#status_clean` %in%

c("Functional",

"Functional but not in use",

"Functional but needs repair"))nonfunc_wp <- wpdx_sf %>%

filter(`#status_clean` %in%

c("Abandoned/Decommissioned",

"Abandoned",

"Non-Functional",

"Non functional due to dry season",

"Non-Functional due to dry season"))unknown_wp <- wpdx_sf %>%

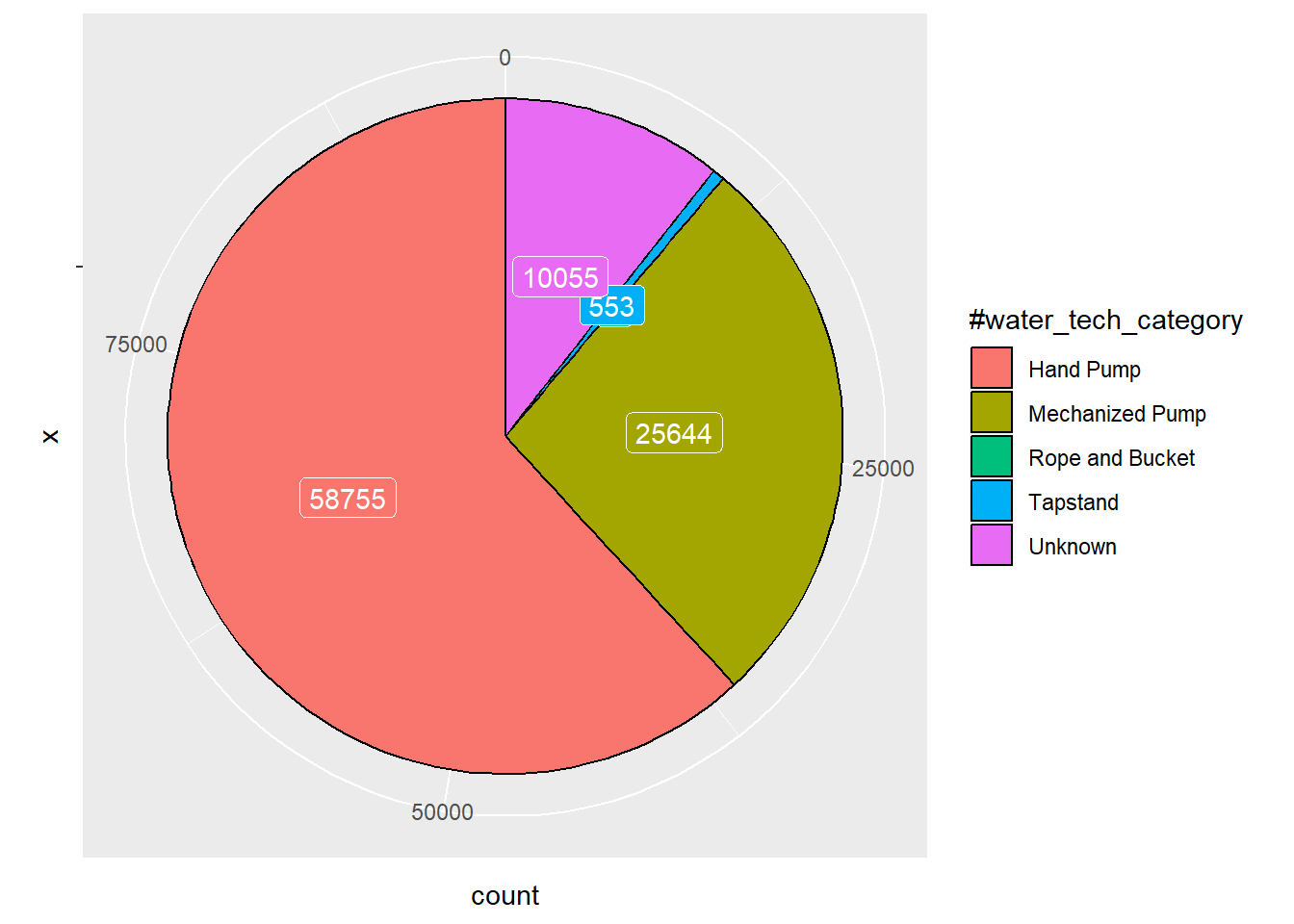

filter(`#status_clean` == "Unknown")In the code chunk below, we will check the proportions in #water-tech-category and then use filter() of dplyr to select only the water points of sizable proportions.

data_1 <- wpdx_sf %>%

group_by(`#water_tech_category`) %>%

summarise(count = n())

ggplot(data_1, aes(x = "", y = count, fill = `#water_tech_category`)) +

geom_col(color = "black") +

geom_label(aes(label = count),

color = "white",

position = position_stack(vjust = 0.5),

show.legend = FALSE) +

coord_polar(theta = "y")

handpump_wp <- wpdx_sf %>%

filter(`#water_tech_category` == "Hand Pump")

mechanized_wp <- wpdx_sf %>%

filter(`#water_tech_category` == "Mechanized Pump")In the code chunk below, filter() of dplyr is used to select water points of usage_capacity >=1000 and those <1000.

highusage_wp <- wpdx_sf %>%

filter(usage_capacity >= 1000)

lowusage_wp <- wpdx_sf %>%

filter(usage_capacity < 1000)In the code chunk below, filter() of dplyr is used to select water points of is_urban = False and those = True.

rural_wp <- wpdx_sf %>%

filter(is_urban == FALSE)

urban_wp <- wpdx_sf %>%

filter(is_urban == TRUE)Performing Point-in-Polygon Count

The code chunk below performs two operations at one go. Firstly, identify water points located inside each LGA by using st_intersects(). Next, length() of Base R is used to calculate numbers of water points that fall inside each LGA.

nga_wp <- nga %>%

mutate(total_wp = lengths(

st_intersects(nga, wpdx_sf))) %>%

mutate(func_wp = lengths(

st_intersects(nga, func_wp))) %>%

mutate(nonfunc_wp = lengths(

st_intersects(nga, nonfunc_wp))) %>%

mutate(unknown_wp = lengths(

st_intersects(nga, unknown_wp))) %>%

mutate(handpump_wp = lengths(

st_intersects(nga, handpump_wp))) %>%

mutate(mechanized_wp = lengths(

st_intersects(nga, mechanized_wp))) %>%

mutate(highusage_wp = lengths(

st_intersects(nga, highusage_wp))) %>%

mutate(lowusage_wp = lengths(

st_intersects(nga, lowusage_wp))) %>%

mutate(rural_wp = lengths(

st_intersects(nga, rural_wp))) %>%

mutate(urban_wp = lengths(

st_intersects(nga, urban_wp)))The code chunk below reveal the summary statistics of nga_wp data frame.

summary(nga_wp) shapeName geometry total_wp func_wp

Length:774 MULTIPOLYGON :774 Min. : 0.0 Min. : 0.00

Class :character epsg:4326 : 0 1st Qu.: 45.0 1st Qu.: 17.00

Mode :character +proj=long...: 0 Median : 96.0 Median : 45.50

Mean :122.7 Mean : 67.36

3rd Qu.:168.8 3rd Qu.: 87.75

Max. :894.0 Max. :752.00

nonfunc_wp unknown_wp handpump_wp mechanized_wp

Min. : 0.00 Min. : 0.00 Min. : 0.00 Min. : 0.00

1st Qu.: 12.25 1st Qu.: 0.00 1st Qu.: 6.00 1st Qu.: 11.00

Median : 34.00 Median : 0.00 Median : 47.00 Median : 25.50

Mean : 41.60 Mean : 13.76 Mean : 75.89 Mean : 33.12

3rd Qu.: 60.75 3rd Qu.: 17.75 3rd Qu.:111.00 3rd Qu.: 46.00

Max. :278.00 Max. :219.00 Max. :764.00 Max. :245.00

highusage_wp lowusage_wp rural_wp urban_wp

Min. : 0.00 Min. : 0.00 Min. : 0.00 Min. : 0.00

1st Qu.: 11.00 1st Qu.: 16.00 1st Qu.: 23.00 1st Qu.: 0.00

Median : 25.50 Median : 60.00 Median : 64.00 Median : 9.00

Mean : 33.12 Mean : 89.59 Mean : 97.45 Mean : 25.27

3rd Qu.: 46.00 3rd Qu.:127.75 3rd Qu.:141.00 3rd Qu.: 33.00

Max. :245.00 Max. :767.00 Max. :894.00 Max. :324.00 Derive new variables using dplyr package

The unit of measurement of the values are number of water points. Using these values directly will be biased towards the underlying total number of water points. In order to overcome this problem, we will derive the percentages of each variable by using the code chunk below.

nga_wp_derived <- nga_wp %>%

mutate(func_perc = func_wp/total_wp) %>%

mutate(nonfunc_perc = nonfunc_wp/total_wp) %>%

mutate(handpump_perc = handpump_wp/total_wp) %>%

mutate(mechanized_perc = mechanized_wp/total_wp) %>%

mutate(highusage_perc = highusage_wp/total_wp) %>%

mutate(lowusage_perc = lowusage_wp/total_wp) %>%

mutate(rural_perc = rural_wp/total_wp) %>%

mutate(urban_perc = urban_wp/total_wp) %>%

select(1:2, 4:5, 13:20)We notice there are some NA values in the nga_wp_derived data frame. As such, is.na() of base R is used to replace NA with 0.

nga_wp_derived[is.na(nga_wp_derived)] = 0Let us review the summary statistics of the newly derived variables using the code chunk below.

summary(nga_wp_derived) shapeName func_wp nonfunc_wp func_perc

Length:774 Min. : 0.00 Min. : 0.00 Min. :0.0000

Class :character 1st Qu.: 17.00 1st Qu.: 12.25 1st Qu.:0.3261

Mode :character Median : 45.50 Median : 34.00 Median :0.4741

Mean : 67.36 Mean : 41.60 Mean :0.4984

3rd Qu.: 87.75 3rd Qu.: 60.75 3rd Qu.:0.6699

Max. :752.00 Max. :278.00 Max. :1.0000

nonfunc_perc handpump_perc mechanized_perc highusage_perc

Min. :0.0000 Min. :0.0000 Min. :0.0000 Min. :0.0000

1st Qu.:0.2105 1st Qu.:0.1670 1st Qu.:0.1220 1st Qu.:0.1220

Median :0.3505 Median :0.5099 Median :0.3127 Median :0.3127

Mean :0.3592 Mean :0.4873 Mean :0.3754 Mean :0.3754

3rd Qu.:0.5076 3rd Qu.:0.7778 3rd Qu.:0.5771 3rd Qu.:0.5771

Max. :1.0000 Max. :1.0000 Max. :1.0000 Max. :1.0000

lowusage_perc rural_perc urban_perc geometry

Min. :0.0000 Min. :0.0000 Min. :0.0000 MULTIPOLYGON :774

1st Qu.:0.3968 1st Qu.:0.5727 1st Qu.:0.0000 epsg:4326 : 0

Median :0.6703 Median :0.8645 Median :0.1195 +proj=long...: 0

Mean :0.6078 Mean :0.7271 Mean :0.2561

3rd Qu.:0.8735 3rd Qu.:1.0000 3rd Qu.:0.3844

Max. :1.0000 Max. :1.0000 Max. :1.0000 Exploratory Data Analysis (EDA)

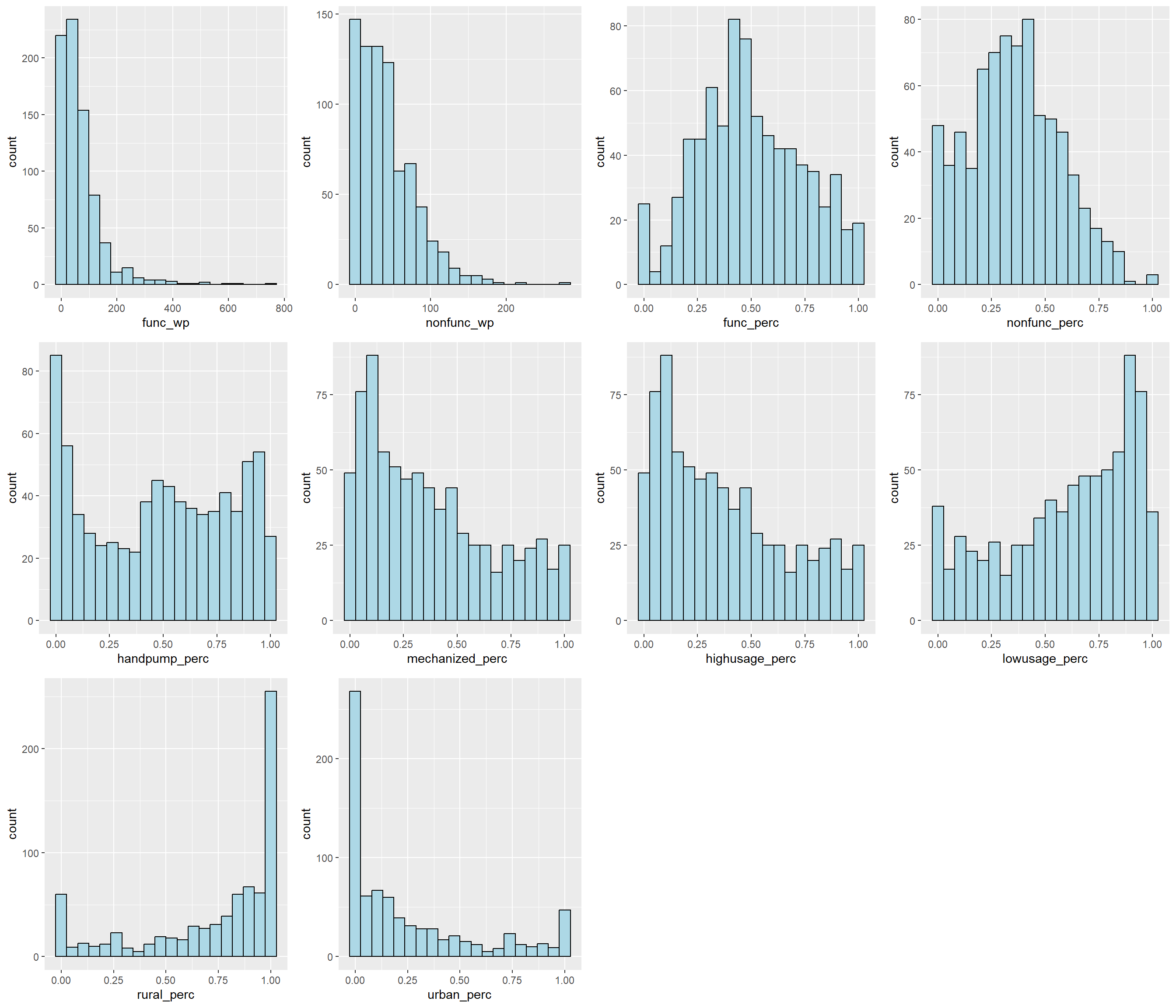

EDA using statistical graphics

The code below uses ggarange() function of ggpubr package to compute multiple histograms so as to reveal the distribution of the selected variables in the nga_wp_derived data frame.

func_wp <- ggplot(data=nga_wp_derived,

aes(x= func_wp)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

nonfunc_wp <- ggplot(data=nga_wp_derived,

aes(x= nonfunc_wp)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

func_perc <- ggplot(data=nga_wp_derived,

aes(x= func_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

nonfunc_perc <- ggplot(data=nga_wp_derived,

aes(x= nonfunc_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

handpump_perc <- ggplot(data=nga_wp_derived,

aes(x= handpump_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

mechanized_perc <- ggplot(data=nga_wp_derived,

aes(x= mechanized_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

highusage_perc <- ggplot(data=nga_wp_derived,

aes(x= highusage_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

lowusage_perc <- ggplot(data=nga_wp_derived,

aes(x= lowusage_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

rural_perc <- ggplot(data=nga_wp_derived,

aes(x= rural_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")

urban_perc <- ggplot(data=nga_wp_derived,

aes(x= urban_perc)) +

geom_histogram(bins=20,

color="black",

fill="light blue")ggarrange(func_wp, nonfunc_wp, func_perc, nonfunc_perc, handpump_perc,

mechanized_perc, highusage_perc, lowusage_perc, rural_perc, urban_perc,

ncol = 4,

nrow = 3)

From the above, we can observe that func_perc and nonfunc_perc are more normally distributed compared to other variables.

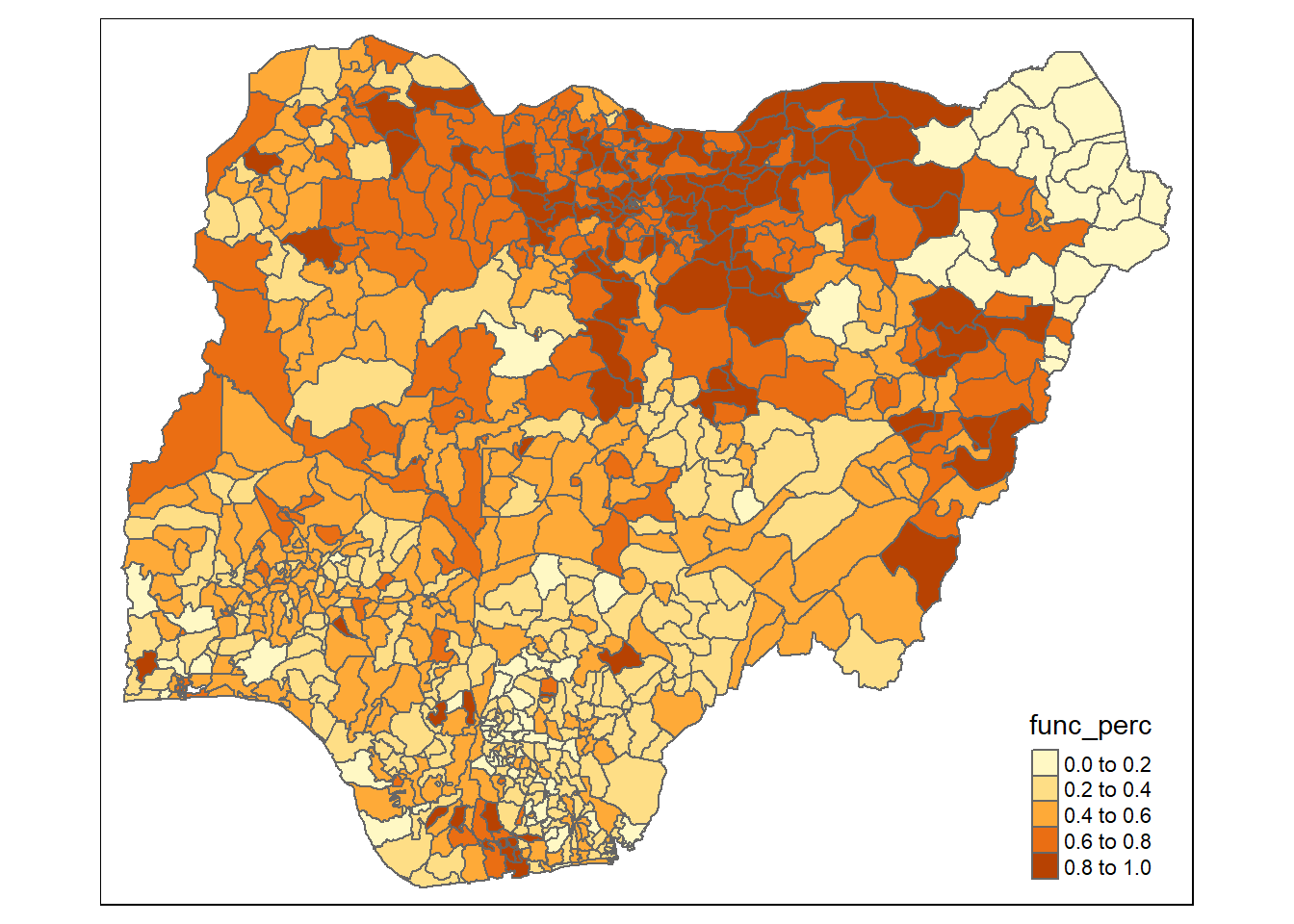

EDA using choropleth map

To have a quick look at the distribution of functional water points percentage of Nigeria at LGA level, a choropleth map will be prepared.

The code chunk below is used to prepare the choropleth by using the qtm() function of tmap package.

qtm(nga_wp_derived, "func_perc")

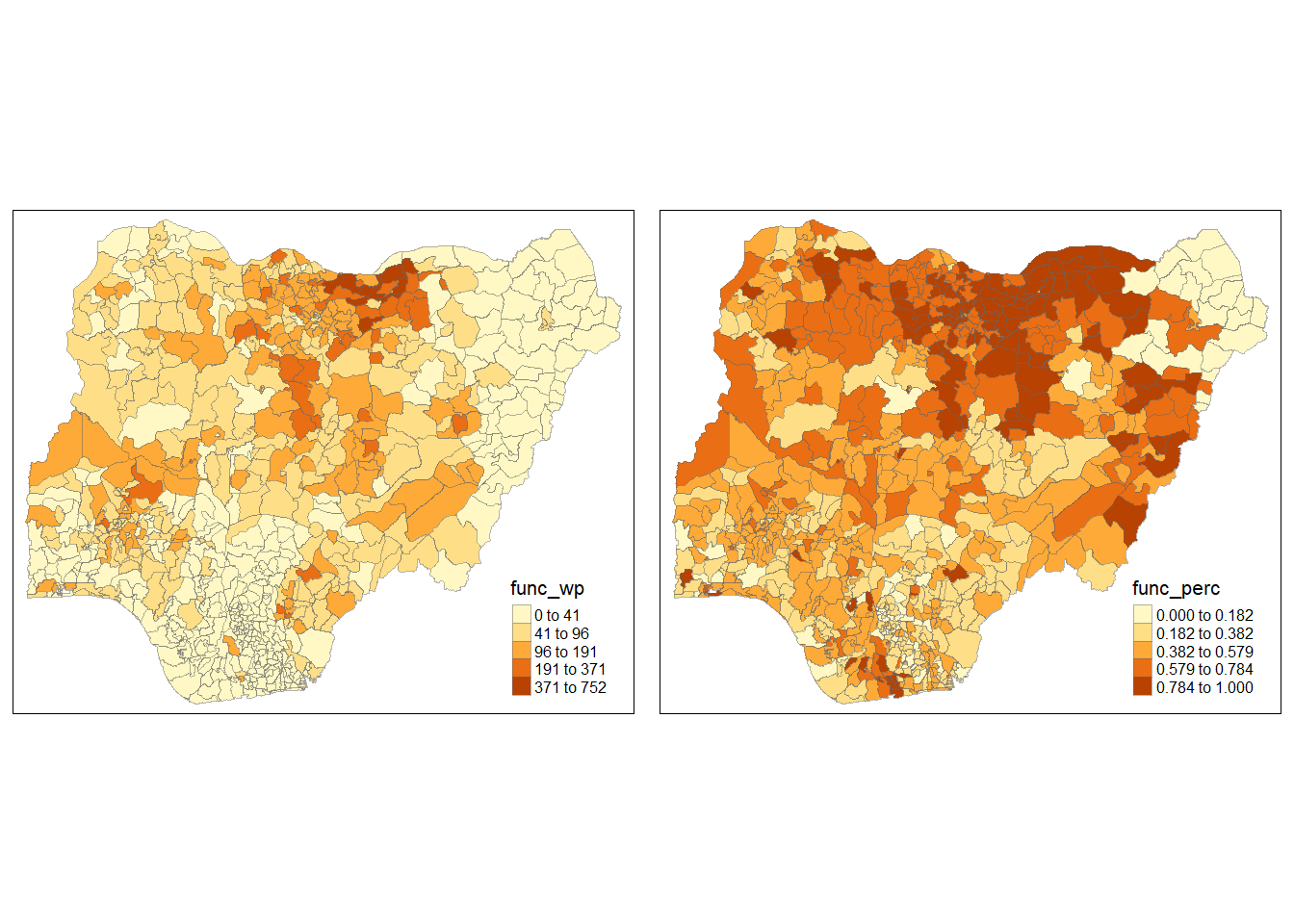

In order to reveal the distribution shown in the choropleth map above are biased towards the underlying number of functional water points at the LGAs, we will create two choropleth maps, one for the number of functional water points (i.e. func.map) and one for the percentage of functional water points (func_perc.map) by using the code chunk below.

func.map <- tm_shape(nga_wp_derived) +

tm_fill(col = "func_wp",

n = 5,

style = "jenks",

title = "func_wp") +

tm_borders(alpha = 0.5) +

tm_layout(scale = 0.7)

func_perc.map <- tm_shape(nga_wp_derived) +

tm_fill(col = "func_perc",

n = 5,

style = "jenks",

title = "func_perc ") +

tm_borders(alpha = 0.5) +

tm_layout(scale = 0.7)

tmap_arrange(func.map, func_perc.map,

asp=NA, ncol=2)

Notice that there are areas with high proportions of functional water points but the actual number of functional water points are low.

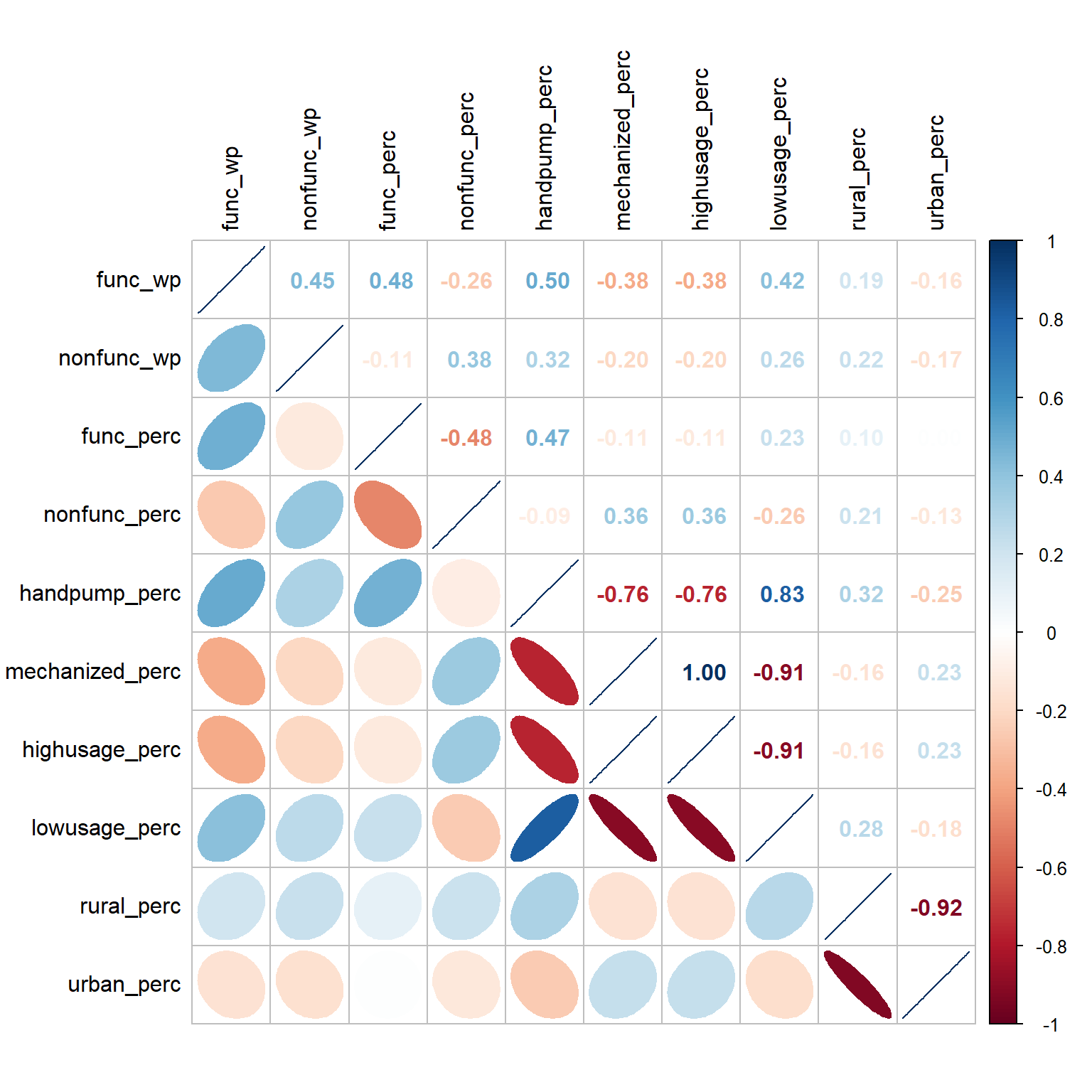

Correlation Analysis

Before we perform cluster analysis, it is important for us to ensure that the cluster variables are not highly correlated. The code below uses corrplot.mixed() function of corrplot package to visualise and analyse the correlation of the input variables.

nga_wp_derived_new <- nga_wp_derived %>%

st_set_geometry(NULL)

cluster_vars.cor = cor(nga_wp_derived_new[, 2:11])

corrplot.mixed(cluster_vars.cor,

lower = "ellipse",

upper = "number",

tl.pos = "lt",

diag = "l",

tl.col = "black")

The correlation plot above shows that many pairs of variables are correlated, both in positive and negative sense. We will keep only one of them in each pair for the cluster analysis later.

Conventional Hierarchy Cluster Analysis

Extracting clustering variables

The code chunk below will be used to extract the clustering variables from nga_wp_derived_new.

cluster_vars <- nga_wp_derived_new %>%

select("shapeName", "func_wp", "nonfunc_wp","func_perc", "nonfunc_perc", "handpump_perc", "rural_perc")

head(cluster_vars, 10) shapeName func_wp nonfunc_wp func_perc nonfunc_perc handpump_perc

1 Aba North 7 9 0.4117647 0.5294118 0.11764706

2 Aba South 29 35 0.4084507 0.4929577 0.09859155

3 Abadam 0 0 0.0000000 0.0000000 0.00000000

4 Abaji 23 34 0.4035088 0.5964912 0.40350877

5 Abak 23 25 0.4791667 0.5208333 0.08333333

6 Abakaliki 82 42 0.3519313 0.1802575 0.43776824

7 Abeokuta North 16 15 0.4705882 0.4411765 0.14705882

8 Abeokuta South 72 33 0.6050420 0.2773109 0.16806723

9 Abi 79 62 0.5197368 0.4078947 0.59868421

10 Aboh-Mbaise 18 26 0.2727273 0.3939394 0.01515152

rural_perc

1 0.00000000

2 0.05633803

3 0.00000000

4 0.84210526

5 0.83333333

6 0.87553648

7 0.20588235

8 0.00000000

9 0.95394737

10 0.72727273Notice that the final clustering variables list does not include variable highusage_perc ,lowusage_perc , mechanized_perc and urban_perc due to the high correlation spotted.

Next, we need to change the rows by LGA name instead of row number by using the code chunk below.

row.names(cluster_vars) <- cluster_vars$"shapeName"

nga_wp_var <- select(cluster_vars, c(2:7))

head(nga_wp_var, 10) func_wp nonfunc_wp func_perc nonfunc_perc handpump_perc

Aba North 7 9 0.4117647 0.5294118 0.11764706

Aba South 29 35 0.4084507 0.4929577 0.09859155

Abadam 0 0 0.0000000 0.0000000 0.00000000

Abaji 23 34 0.4035088 0.5964912 0.40350877

Abak 23 25 0.4791667 0.5208333 0.08333333

Abakaliki 82 42 0.3519313 0.1802575 0.43776824

Abeokuta North 16 15 0.4705882 0.4411765 0.14705882

Abeokuta South 72 33 0.6050420 0.2773109 0.16806723

Abi 79 62 0.5197368 0.4078947 0.59868421

Aboh-Mbaise 18 26 0.2727273 0.3939394 0.01515152

rural_perc

Aba North 0.00000000

Aba South 0.05633803

Abadam 0.00000000

Abaji 0.84210526

Abak 0.83333333

Abakaliki 0.87553648

Abeokuta North 0.20588235

Abeokuta South 0.00000000

Abi 0.95394737

Aboh-Mbaise 0.72727273Data Standardisation

In order to avoid cluster analysis result being biased towards clustering variables with large values, it is useful to standardise the input variables before performing cluster analysis.

The code chunk below uses normalize() of heatmaply package to standardise the clustering variables.

nga_wp_var.std <- normalize(nga_wp_var)

summary(nga_wp_var.std) func_wp nonfunc_wp func_perc nonfunc_perc

Min. :0.00000 Min. :0.00000 Min. :0.0000 Min. :0.0000

1st Qu.:0.02261 1st Qu.:0.04406 1st Qu.:0.3261 1st Qu.:0.2105

Median :0.06051 Median :0.12230 Median :0.4741 Median :0.3505

Mean :0.08957 Mean :0.14962 Mean :0.4984 Mean :0.3592

3rd Qu.:0.11669 3rd Qu.:0.21853 3rd Qu.:0.6699 3rd Qu.:0.5076

Max. :1.00000 Max. :1.00000 Max. :1.0000 Max. :1.0000

handpump_perc rural_perc

Min. :0.0000 Min. :0.0000

1st Qu.:0.1670 1st Qu.:0.5727

Median :0.5099 Median :0.8645

Mean :0.4873 Mean :0.7271

3rd Qu.:0.7778 3rd Qu.:1.0000

Max. :1.0000 Max. :1.0000 Note that the values range of the standardised clustering variables are 0-1 now.

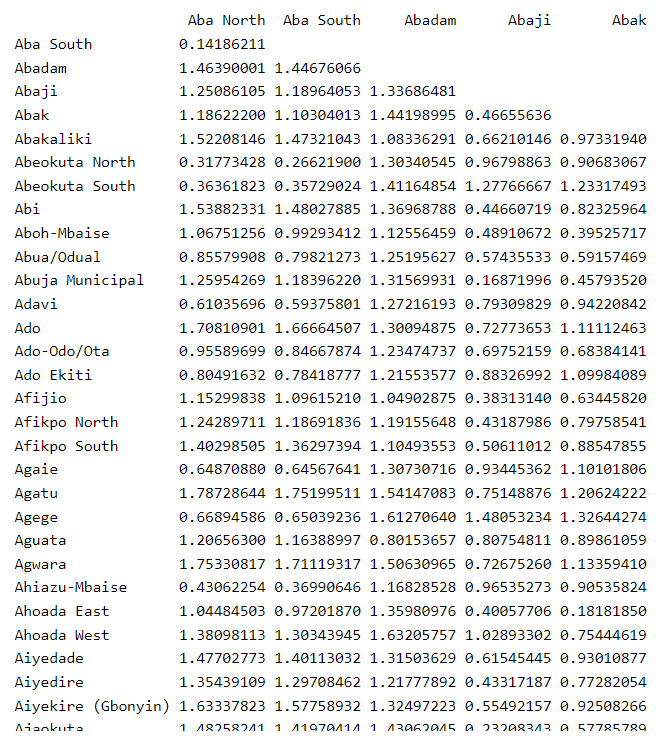

Computing proximity matrix

The code chunk below is used to compute the proximity matrix using euclidean method.

proxmat <- dist(nga_wp_var.std, method = 'euclidean')The code chunk below can then be used to list the content of proxmat for visual inspection.

proxmat

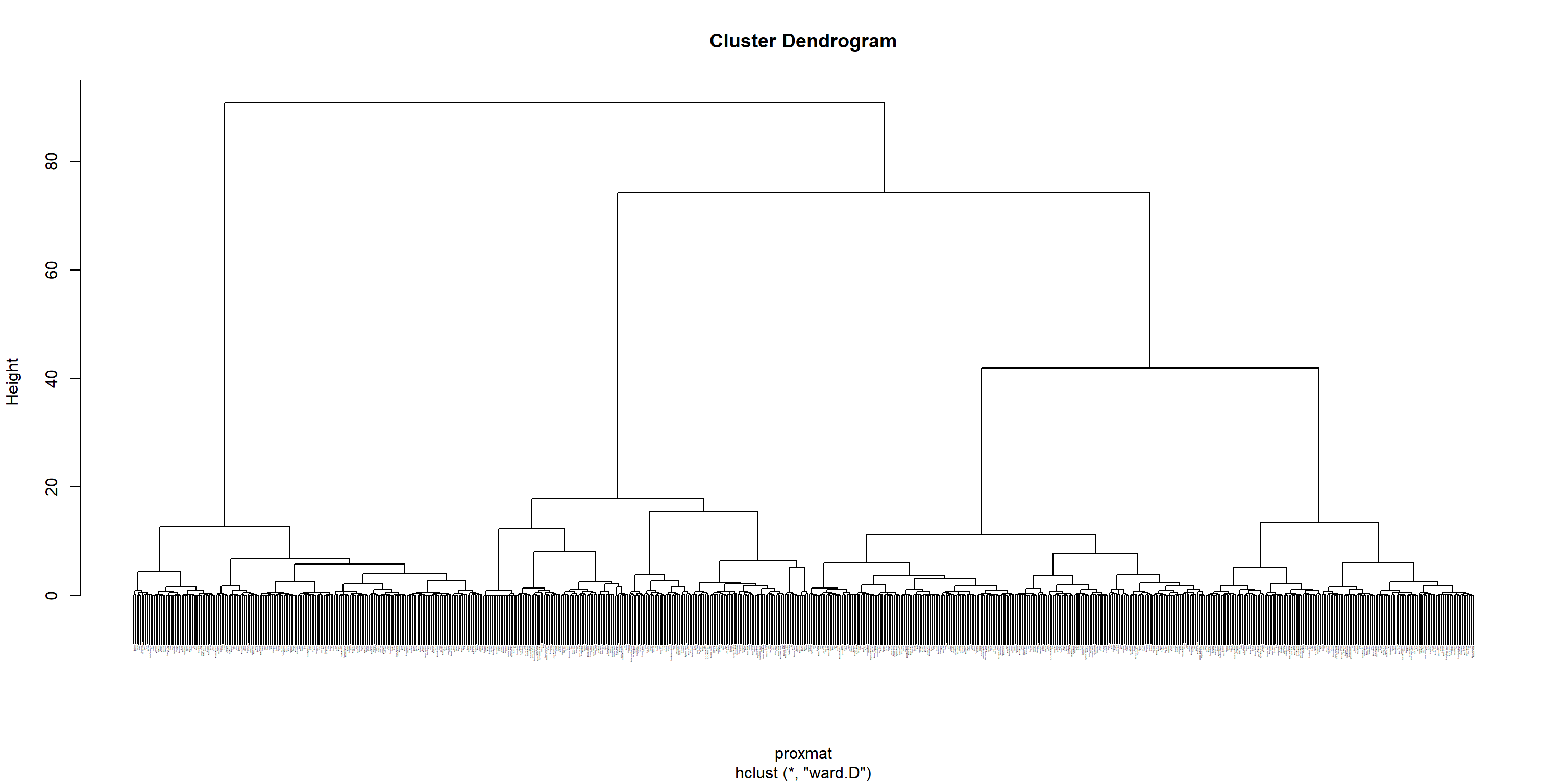

Computing hierarchical clustering

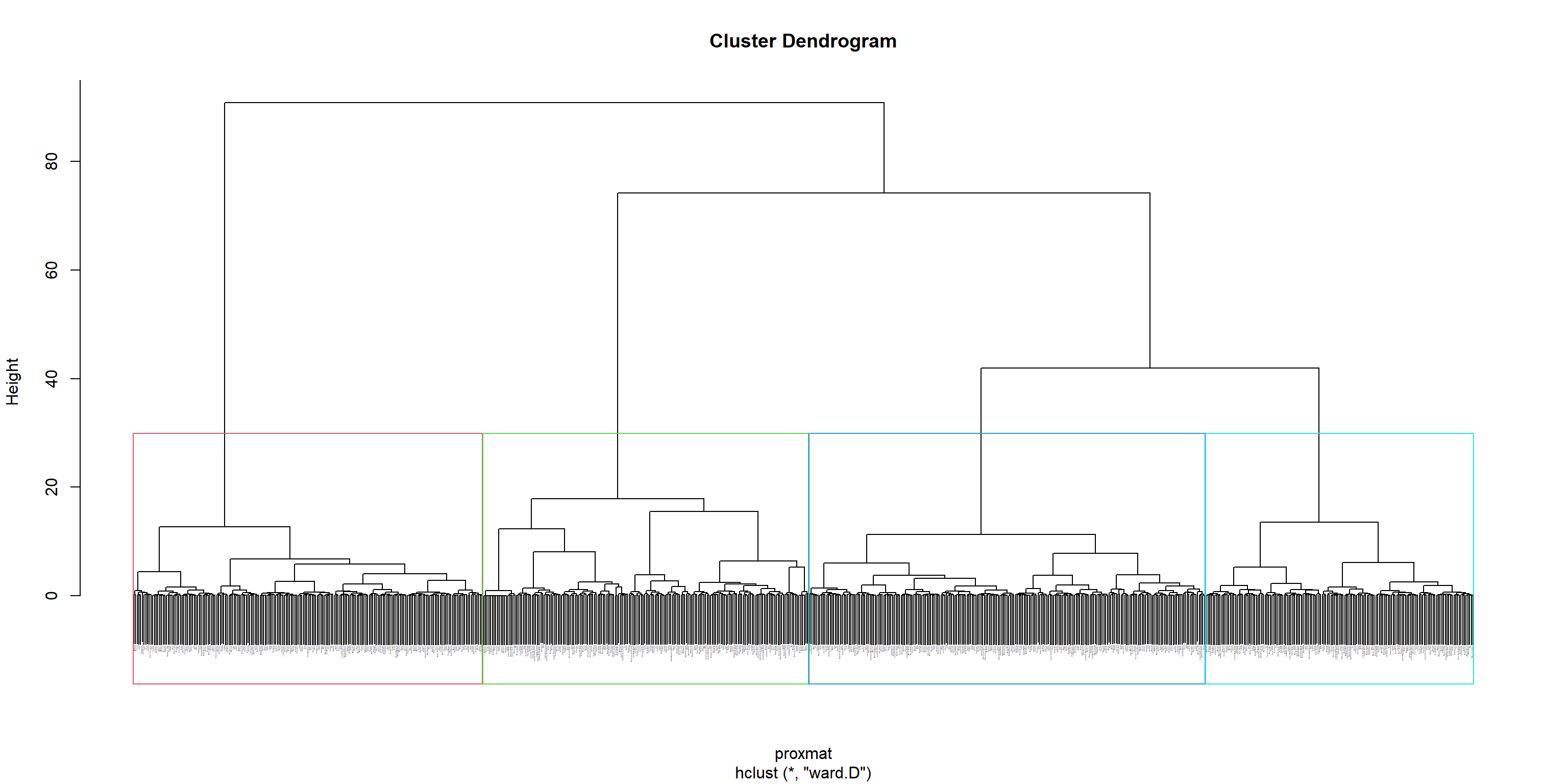

The code chunk below uses hclust() of R stats to performs hierarchical cluster analysis via the ward.D method. The hierarchical clustering output is stored in an object of class hclust which describes the tree produced by the clustering process.

hclust_ward <- hclust(proxmat, method = 'ward.D')We can then plot the tree by using plot() of R Graphics as shown in the code chunk below.

plot(hclust_ward, cex = 0.1)

Selecting the optimal clustering algorithm

The code chunk below will be used to compute the agglomerative coefficients of all hierarchical clustering algorithms.

m <- c( "average", "single", "complete", "ward")

names(m) <- c( "average", "single", "complete", "ward")

ac <- function(x) {

agnes(nga_wp_var.std, method = x)$ac

}

map_dbl(m, ac) average single complete ward

0.9114067 0.7819154 0.9440637 0.9902760 With reference to the output above, we can see that Ward’s method provides the strongest clustering structure among the four methods assessed. Hence, in the subsequent analysis, only Ward’s method will be used.

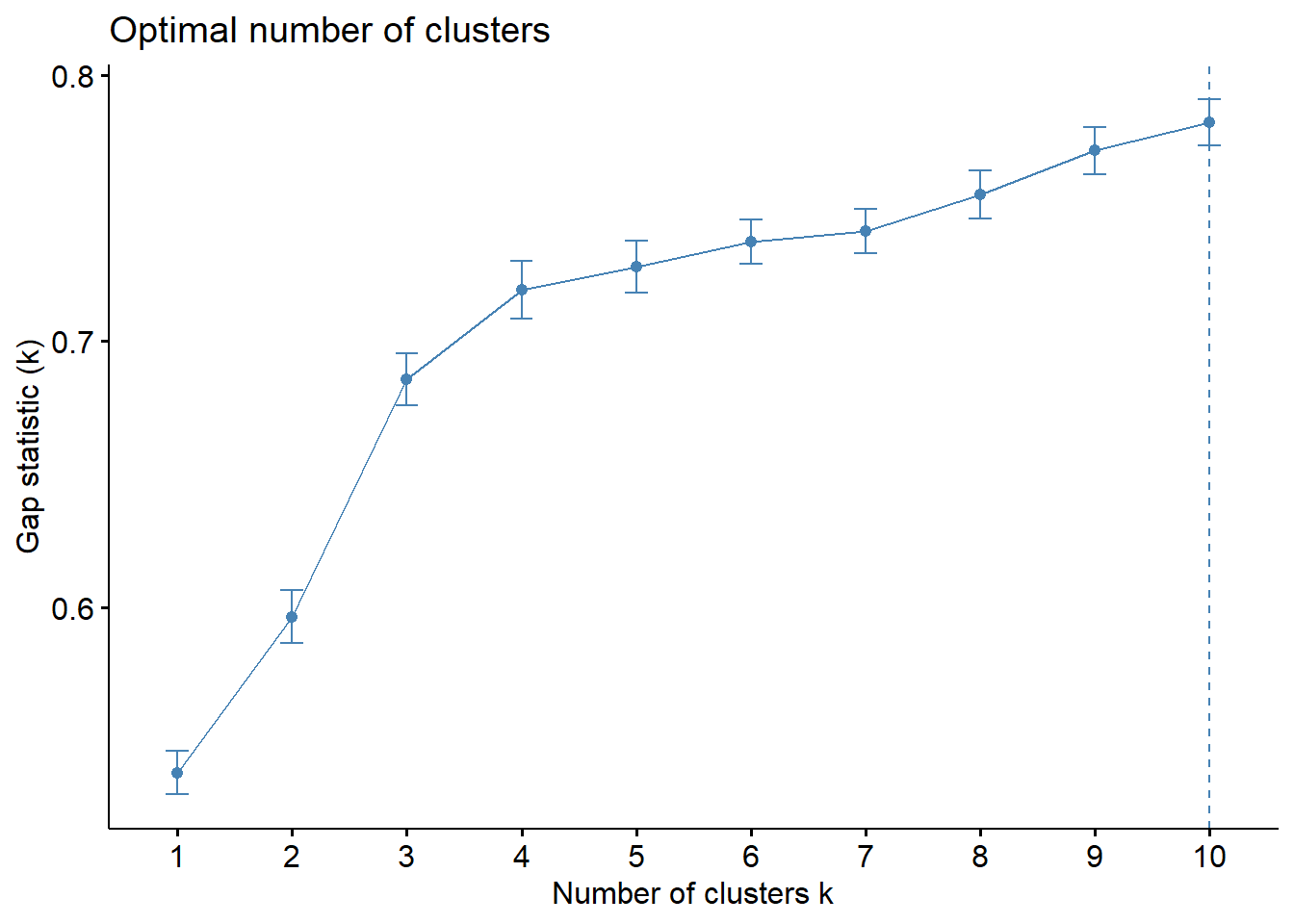

Determining Optimal Clusters

We will use the Gap Statistic Method to determine the optimal clusters to retain. To compute the gap statistic, clusGap() of cluster package will be used.

set.seed(12345)

gap_stat <- clusGap(nga_wp_var.std,

FUN = hcut,

nstart = 25,

K.max = 10,

B = 50)

# Print the result

print(gap_stat, method = "firstmax")Clustering Gap statistic ["clusGap"] from call:

clusGap(x = nga_wp_var.std, FUNcluster = hcut, K.max = 10, B = 50, nstart = 25)

B=50 simulated reference sets, k = 1..10; spaceH0="scaledPCA"

--> Number of clusters (method 'firstmax'): 10

logW E.logW gap SE.sim

[1,] 5.000512 5.538387 0.5378754 0.008086882

[2,] 4.838605 5.435003 0.5963981 0.009965797

[3,] 4.675903 5.361644 0.6857413 0.009706062

[4,] 4.585719 5.304982 0.7192630 0.010856810

[5,] 4.533596 5.261506 0.7279098 0.009875097

[6,] 4.487042 5.224385 0.7373430 0.008415453

[7,] 4.452088 5.193322 0.7412337 0.008279306

[8,] 4.411099 5.166137 0.7550376 0.008932377

[9,] 4.372379 5.143994 0.7716147 0.008912226

[10,] 4.342018 5.124133 0.7821150 0.008547773Next, we can visualise the plot by using fviz_gap_stat() of factoextra package.

fviz_gap_stat(gap_stat)

From the output above, the optimal number of clusters is 4.

Interpreting the dendrograms

The code below uses rect.hclust() of R stats to add border colors for the rectangles.

plot(hclust_ward, cex = 0.1)

rect.hclust(hclust_ward,

k = 4,

border = 2:5)

Visually-driven hierarchical clustering analysis

The code chunk below uses the heatmaply() of heatmaply package to build an interactive cluster heatmap.

nga_wp_var_mat <- data.matrix(nga_wp_var.std)heatmaply(normalize(nga_wp_var_mat),

Colv=NA,

dist_method = "euclidean",

hclust_method = "ward.D",

seriate = "OLO",

colors = Blues,

k_row = 4,

margins = c(NA,200,60,NA),

fontsize_row = 4,

fontsize_col = 5,

main="Geographic Segmentation of LGAs by Cluster Variables",

xlab = "Cluster Variables",

ylab = "LGAs of Nigeria"

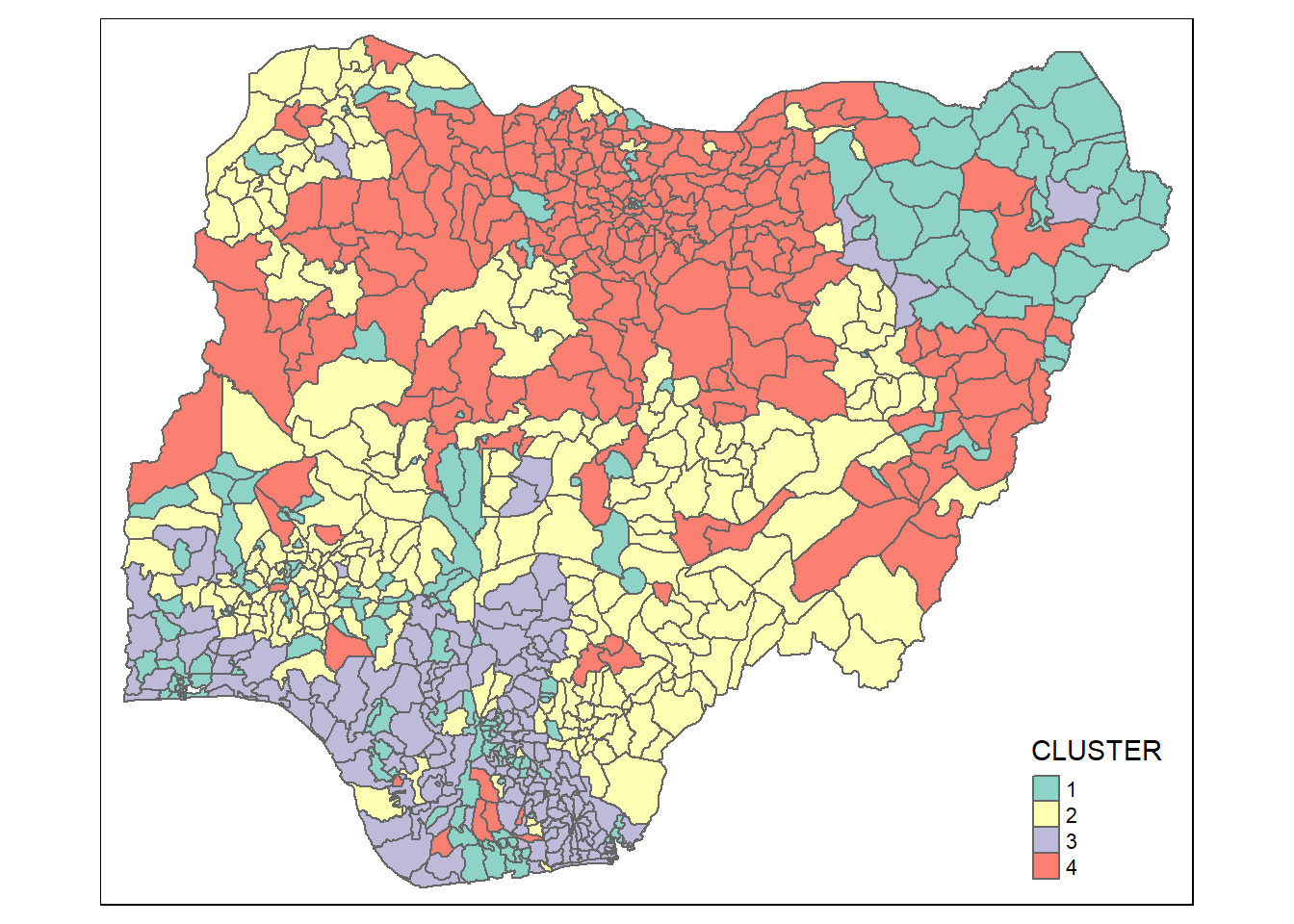

)Mapping the clusters formed

In order to visualise the clusters formed, the code chunk below uses qtm() of tmap package to plot the choropleth map.

groups <- as.factor(cutree(hclust_ward, k=4))nga_wp_cluster <- cbind(nga, as.matrix(groups)) %>%

rename(`CLUSTER`=`as.matrix.groups.`)qtm(nga_wp_cluster, "CLUSTER")

The choropleth map above reveals that the clusters are very fragmented. The is one of the major limitations when non-spatial clustering algorithm such as hierarchical cluster analysis method is used.

Spatially Constrained Clustering: SKATER approach

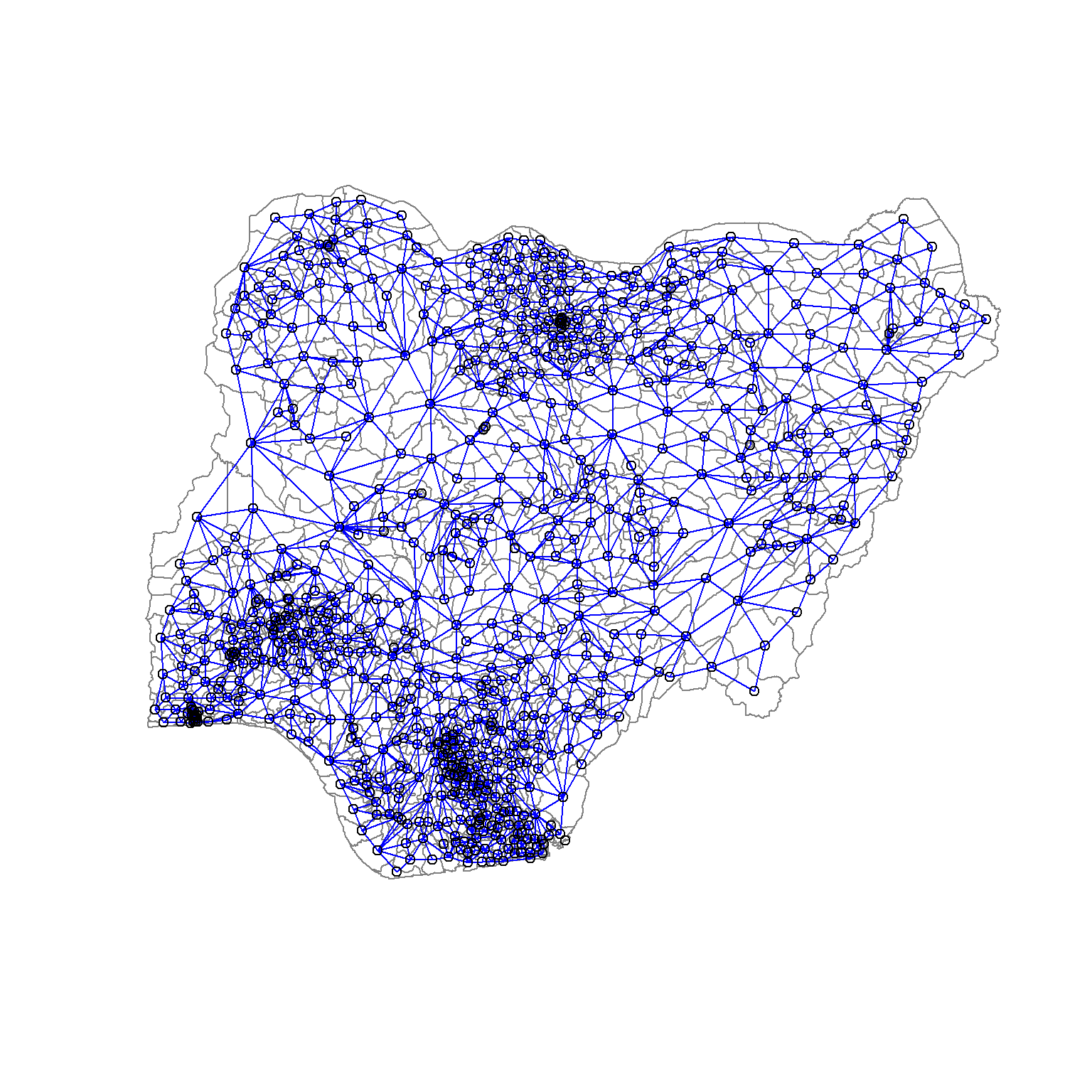

Computing Neighbour List

The code chunk below uses poly2nd() of spdep package to compute the neighbours list from polygon list.

nga_wp_sp <- as_Spatial(nga_wp_derived)nga_wp.nb <- poly2nb(nga_wp_sp)

summary(nga_wp.nb)Neighbour list object:

Number of regions: 774

Number of nonzero links: 4440

Percentage nonzero weights: 0.7411414

Average number of links: 5.736434

1 region with no links:

86

Link number distribution:

0 1 2 3 4 5 6 7 8 9 10 11 12 14

1 2 14 57 125 182 140 122 72 41 12 4 1 1

2 least connected regions:

138 560 with 1 link

1 most connected region:

508 with 14 linksplot(nga_wp_sp,

border=grey(.5))

plot(nga_wp.nb,

coordinates(nga_wp_sp),

col="blue",

add=TRUE)

Computing minimum spanning tree

Recomputing Neighbour List

The neighbours list is recomputed as we remove the LGA with no link.

nga_wp_v2 <- nga_wp_derived[c(1:85, 87:774),]

nga_wp_sp_v2 <- as_Spatial(nga_wp_v2)

nga_wp_var_v2 <- nga_wp_var.std[c(1:85, 87:774),]nga_wp.nb <- poly2nb(nga_wp_sp_v2)

summary(nga_wp.nb)Neighbour list object:

Number of regions: 773

Number of nonzero links: 4440

Percentage nonzero weights: 0.7430602

Average number of links: 5.743855

Link number distribution:

1 2 3 4 5 6 7 8 9 10 11 12 14

2 14 57 125 182 140 122 72 41 12 4 1 1

2 least connected regions:

138 560 with 1 link

1 most connected region:

508 with 14 linksCalculating edge costs

The code chunk below uses nbcosts() of spdep package to compute the cost of each edge, which is the distance between nodes.

lcosts <- nbcosts(nga_wp.nb, nga_wp_var_v2)nga_wp.w <- nb2listw(nga_wp.nb,

lcosts,

style="B")

summary(nga_wp.w)Characteristics of weights list object:

Neighbour list object:

Number of regions: 773

Number of nonzero links: 4440

Percentage nonzero weights: 0.7430602

Average number of links: 5.743855

Link number distribution:

1 2 3 4 5 6 7 8 9 10 11 12 14

2 14 57 125 182 140 122 72 41 12 4 1 1

2 least connected regions:

138 560 with 1 link

1 most connected region:

508 with 14 links

Weights style: B

Weights constants summary:

n nn S0 S1 S2

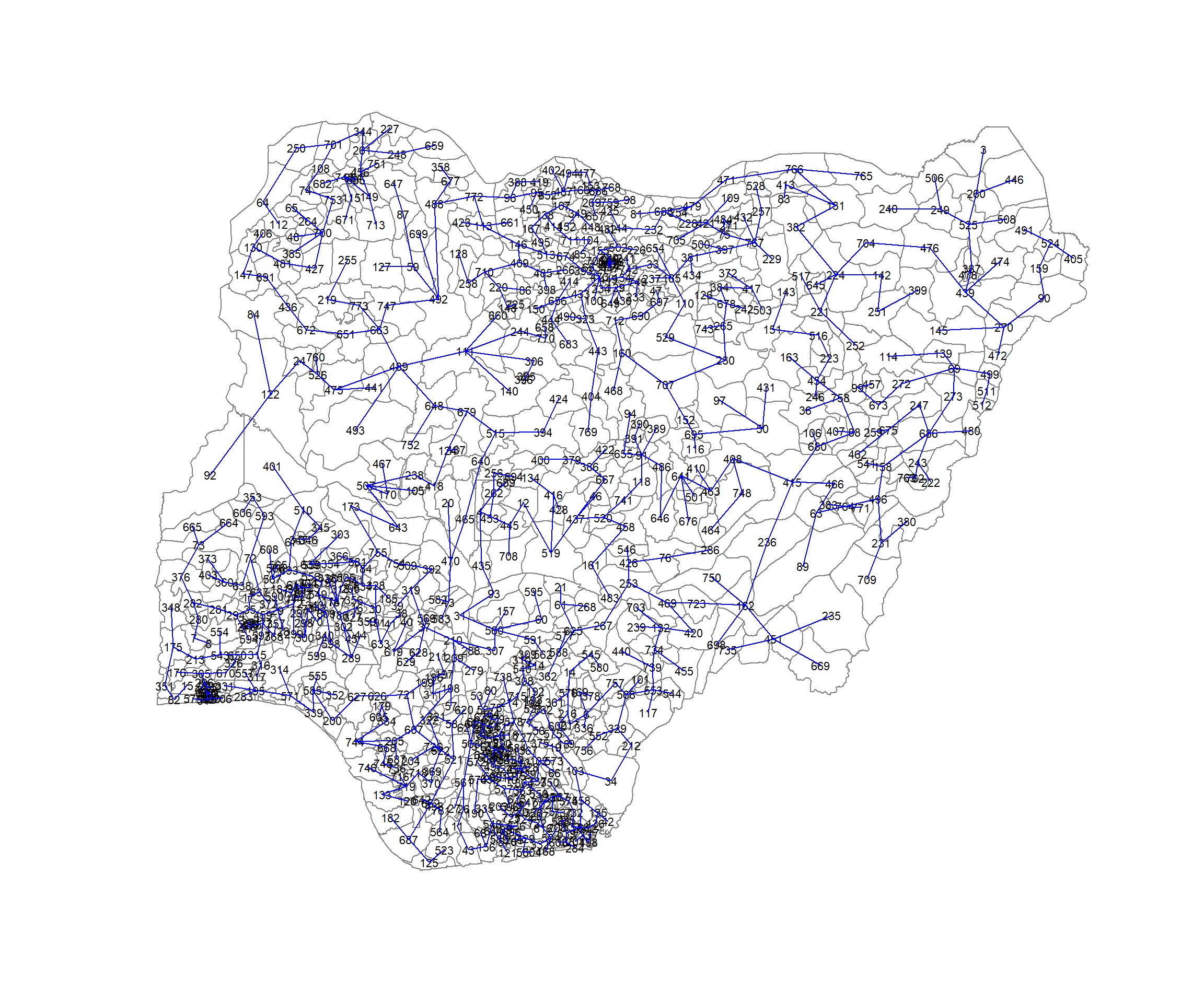

B 773 597529 2109.869 2666.155 28614.43Computing minimum spanning tree

The minimum spanning tree is computed by mean of the mstree() of spdep package as shown in the code chunk below.

nga_wp.mst <- mstree(nga_wp.w)class(nga_wp.mst)[1] "mst" "matrix"dim(nga_wp.mst)[1] 772 3head(nga_wp.mst) [,1] [,2] [,3]

[1,] 296 49 0.2877620

[2,] 296 325 0.3152240

[3,] 325 622 0.1296126

[4,] 622 514 0.1773219

[5,] 514 461 0.1267727

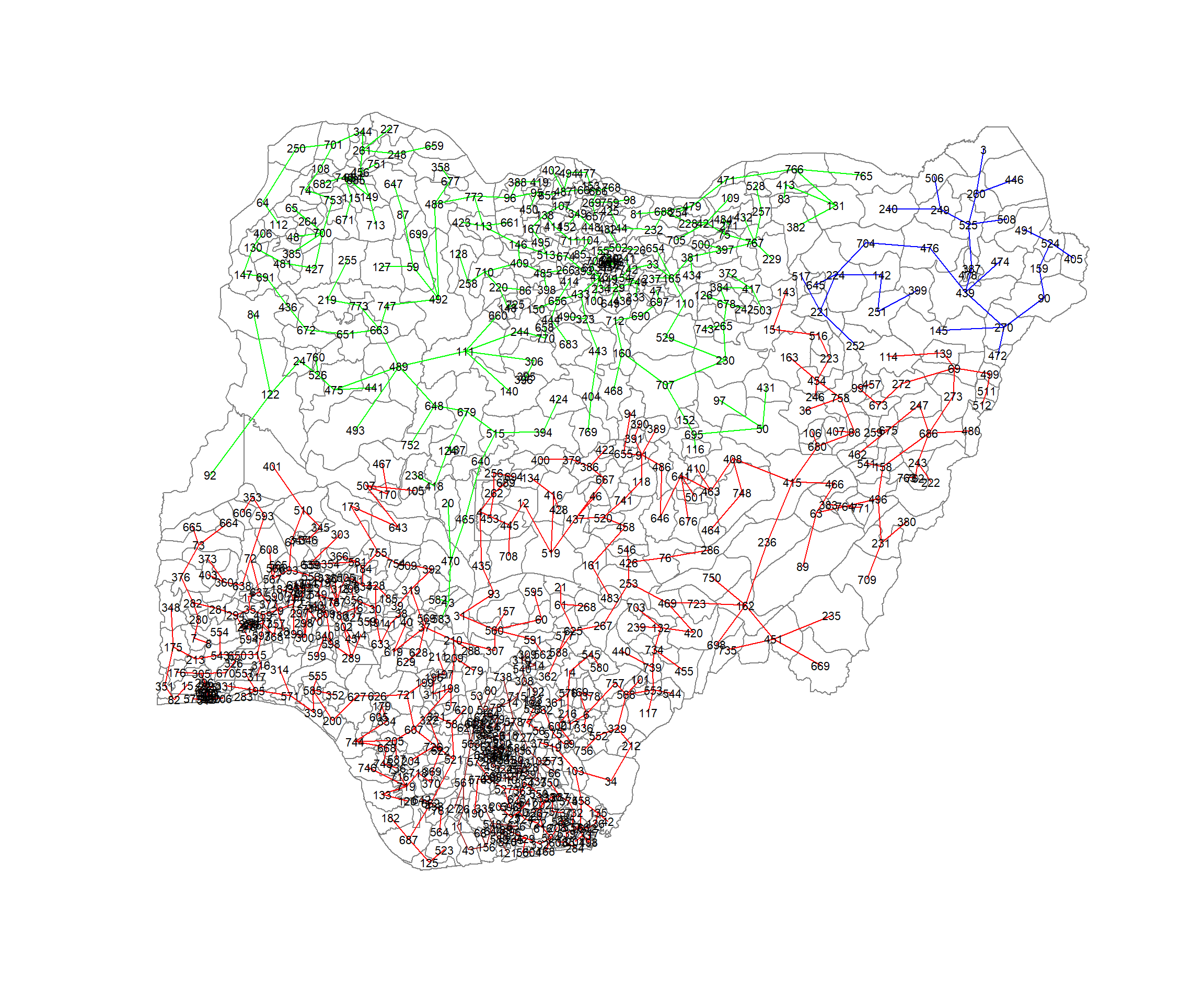

[6,] 514 681 0.1891041The plot method for the MST includes a way to show the observation numbers of the nodes in addition to the edge. As before, we plot this together with the LGA boundaries. We can see how the initial neighbour list is simplified to just one edge connecting each of the nodes, while passing through all the nodes.

plot(nga_wp_sp_v2, border=gray(.5))

plot.mst(nga_wp.mst,

coordinates(nga_wp_sp_v2),

col="blue",

cex.lab=0.7,

cex.circles=0.005,

add=TRUE)

Computing spatially constrained clusters using SKATER method

The code chunk below computes the spatially constrained cluster using skater() of spdep package. The number of cuts used here is 3 as it is 1 less than the number of clusters.

clust4 <- spdep::skater(edges = nga_wp.mst[,1:2],

data = nga_wp_var_v2,

method = "euclidean",

ncuts = 3)str(clust4)List of 8

$ groups : num [1:773] 1 1 3 1 1 1 1 1 1 4 ...

$ edges.groups:List of 4

..$ :List of 3

.. ..$ node: num [1:433] 364 558 103 34 212 550 202 329 586 537 ...

.. ..$ edge: num [1:432, 1:3] 459 172 300 299 71 326 670 177 29 305 ...

.. ..$ ssw : num 208

..$ :List of 3

.. ..$ node: num [1:230] 382 131 766 471 479 688 228 421 75 767 ...

.. ..$ edge: num [1:229, 1:3] 772 747 663 113 492 488 663 651 672 146 ...

.. ..$ ssw : num 95.6

..$ :List of 3

.. ..$ node: num [1:30] 476 704 224 439 387 525 270 90 142 159 ...

.. ..$ edge: num [1:29, 1:3] 476 704 387 224 224 439 439 525 525 270 ...

.. ..$ ssw : num 19.4

..$ :List of 3

.. ..$ node: num [1:80] 337 367 727 102 617 578 23 536 188 293 ...

.. ..$ edge: num [1:79, 1:3] 563 26 601 602 561 11 43 292 156 188 ...

.. ..$ ssw : num 34.6

$ not.prune : NULL

$ candidates : int [1:4] 1 2 3 4

$ ssto : num 425

$ ssw : num [1:4] 425 388 372 358

$ crit : num [1:2] 1 Inf

$ vec.crit : num [1:773] 1 1 1 1 1 1 1 1 1 1 ...

- attr(*, "class")= chr "skater"ccs4 <- clust4$groups

ccs4 [1] 1 1 3 1 1 1 1 1 1 4 4 1 2 1 1 1 1 1 1 2 1 1 4 2 4 4 4 1 1 1 1 1 2 1 1 1 1

[38] 1 1 1 1 1 4 1 1 1 2 2 1 2 1 4 4 4 1 1 1 1 2 1 1 1 1 2 2 4 1 4 1 1 1 1 1 2

[75] 2 1 1 4 4 4 2 1 2 2 2 2 2 1 1 3 1 2 1 1 2 2 2 2 1 2 1 4 1 2 1 1 2 2 2 2 2

[112] 2 2 1 2 2 1 1 1 1 4 2 1 2 1 2 2 2 2 2 2 1 1 1 1 1 2 2 1 2 2 3 1 2 3 2 2 2

[149] 2 2 1 2 2 2 2 4 1 1 3 2 1 1 1 4 2 2 2 1 1 1 1 1 1 1 1 1 1 1 1 4 1 1 1 1 1

[186] 1 1 4 4 1 1 4 4 4 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 4 4 1 1 2 2 2 3 1

[223] 1 3 2 2 2 2 2 2 1 2 2 2 1 1 2 2 1 3 2 2 1 2 1 1 1 2 3 2 3 3 1 2 2 1 2 2 1

[260] 3 2 1 2 2 2 2 1 1 2 3 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 4 4 4 4 1 1 1

[297] 1 1 1 1 1 1 1 1 1 2 1 4 4 4 1 4 4 1 1 1 1 1 1 1 1 1 2 4 1 1 1 1 1 1 1 1 1

[334] 1 4 1 4 1 1 1 1 1 1 2 1 1 1 1 2 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 4 1 4 1 1 1

[371] 4 2 1 1 1 1 1 1 1 1 2 2 1 2 2 1 3 2 1 1 1 1 2 2 2 2 2 2 3 1 1 2 1 2 3 2 1

[408] 1 2 1 2 2 2 2 1 1 2 2 2 1 2 1 2 2 2 1 2 1 1 2 2 2 2 2 1 2 1 1 3 1 2 1 2 2

[445] 1 3 2 2 2 2 1 2 1 1 1 2 1 1 1 1 1 1 1 1 2 1 1 2 1 2 2 3 2 3 2 3 2 3 2 1 2

[482] 2 1 2 2 1 2 2 2 2 3 2 2 2 2 1 4 1 1 2 1 2 2 1 1 3 1 3 1 1 1 1 2 1 2 1 3 2

[519] 1 1 1 1 1 3 3 2 1 2 2 4 4 1 1 4 4 4 1 1 1 4 1 4 1 1 1 1 1 4 1 1 4 1 1 1 1

[556] 1 1 1 1 1 4 1 4 1 1 1 1 1 1 4 1 1 4 1 1 1 1 4 1 1 1 2 2 4 1 1 1 1 4 1 1 1

[593] 1 1 1 1 1 1 1 1 4 4 1 1 1 1 1 1 1 4 1 1 4 4 4 1 4 4 1 1 4 1 1 1 1 1 1 1 1

[630] 4 4 1 1 1 4 4 1 1 1 2 1 1 1 4 3 1 2 2 2 1 2 2 2 2 1 2 2 2 2 2 2 1 2 1 1 2

[667] 1 1 1 1 2 2 1 2 1 1 2 2 2 1 1 2 2 2 2 1 1 2 1 2 2 1 1 1 2 1 2 1 2 2 2 2 1

[704] 3 2 2 2 1 1 2 2 2 2 4 4 1 1 1 1 1 1 1 1 1 1 1 4 4 4 2 4 1 1 1 1 1 1 4 1 2

[741] 1 2 2 1 1 1 2 1 2 1 2 2 2 1 1 1 1 1 2 2 1 1 1 1 2 2 2 2 2 2 1 2 2table(ccs4)ccs4

1 2 3 4

433 230 30 80 We can now plot the pruned tree that shows the 4 clusters on top of the LGA area.

plot(nga_wp_sp_v2, border=gray(.5))

plot(clust4,

coordinates(nga_wp_sp_v2),

cex.lab=.7,

groups.colors=c("red","green","blue", "brown", "pink"),

cex.circles=0.005,

add=TRUE)

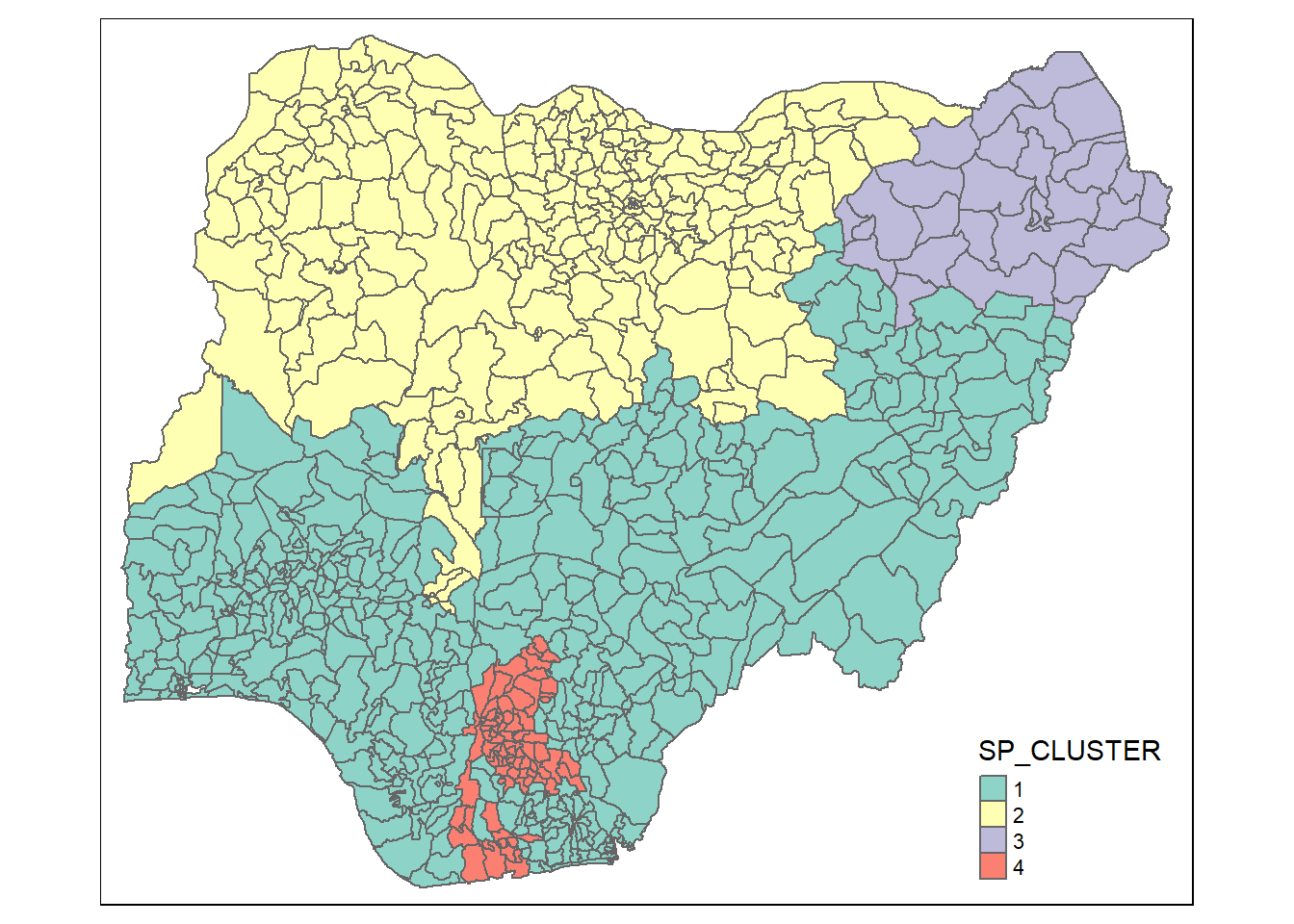

Visualising the clusters in choropleth map

The code chunk below is used to plot the newly derived clusters by using SKATER method.

groups_mat <- as.matrix(clust4$groups)

nga_wp_sf_spatialcluster <- cbind(nga_wp_v2, as.factor(groups_mat)) %>%

rename(`SP_CLUSTER`=`as.factor.groups_mat.`)

qtm(nga_wp_sf_spatialcluster, "SP_CLUSTER")

Spatially Constrained Hierarchical Clustering

Before we can perform spatially constrained hierarchical clustering, a spatial distance matrix will be derived by using st_distance() of sf package.

dist <- st_distance(nga_wp_derived, nga_wp_derived)

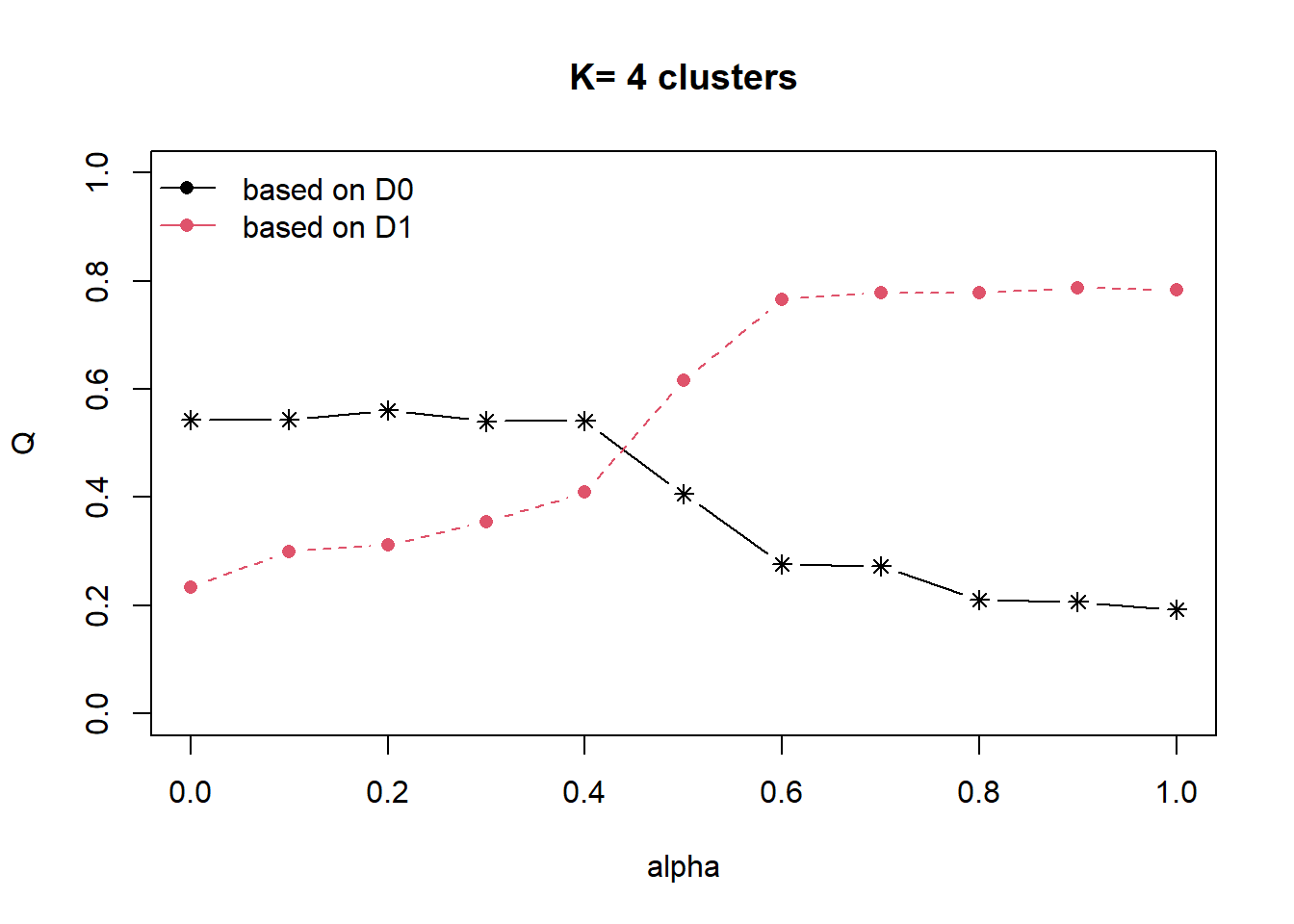

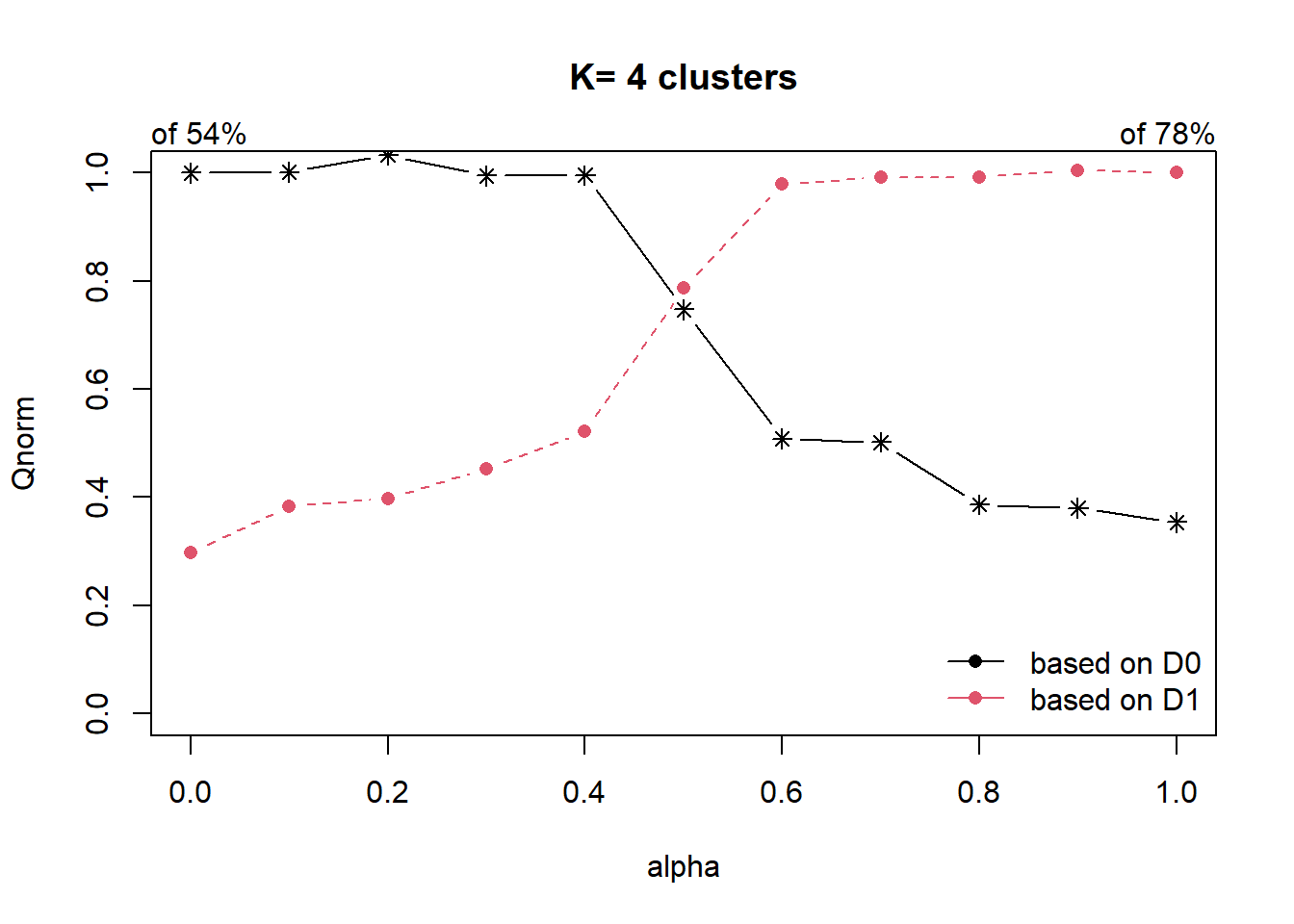

distmat <- as.dist(dist)Next, choicealpha() of ClustGeo package will be used to determine a suitable value for the mixing parameter alpha as shown in the code chunk below.

cr <- choicealpha(proxmat, distmat, range.alpha = seq(0, 1, 0.1), K=4, graph = TRUE)

With reference to the graphs above, alpha = 0.5 will be used as shown in the code chunk below.

clustG <- hclustgeo(proxmat, distmat, alpha = 0.5)groups <- as.factor(cutree(clustG, k=4))nga_wp_sf_Gcluster <- cbind(nga_wp_derived, as.matrix(groups)) %>%

rename(`CLUSTER` = `as.matrix.groups.`)We can now plot the map of the newly delineated spatially constrained clusters.

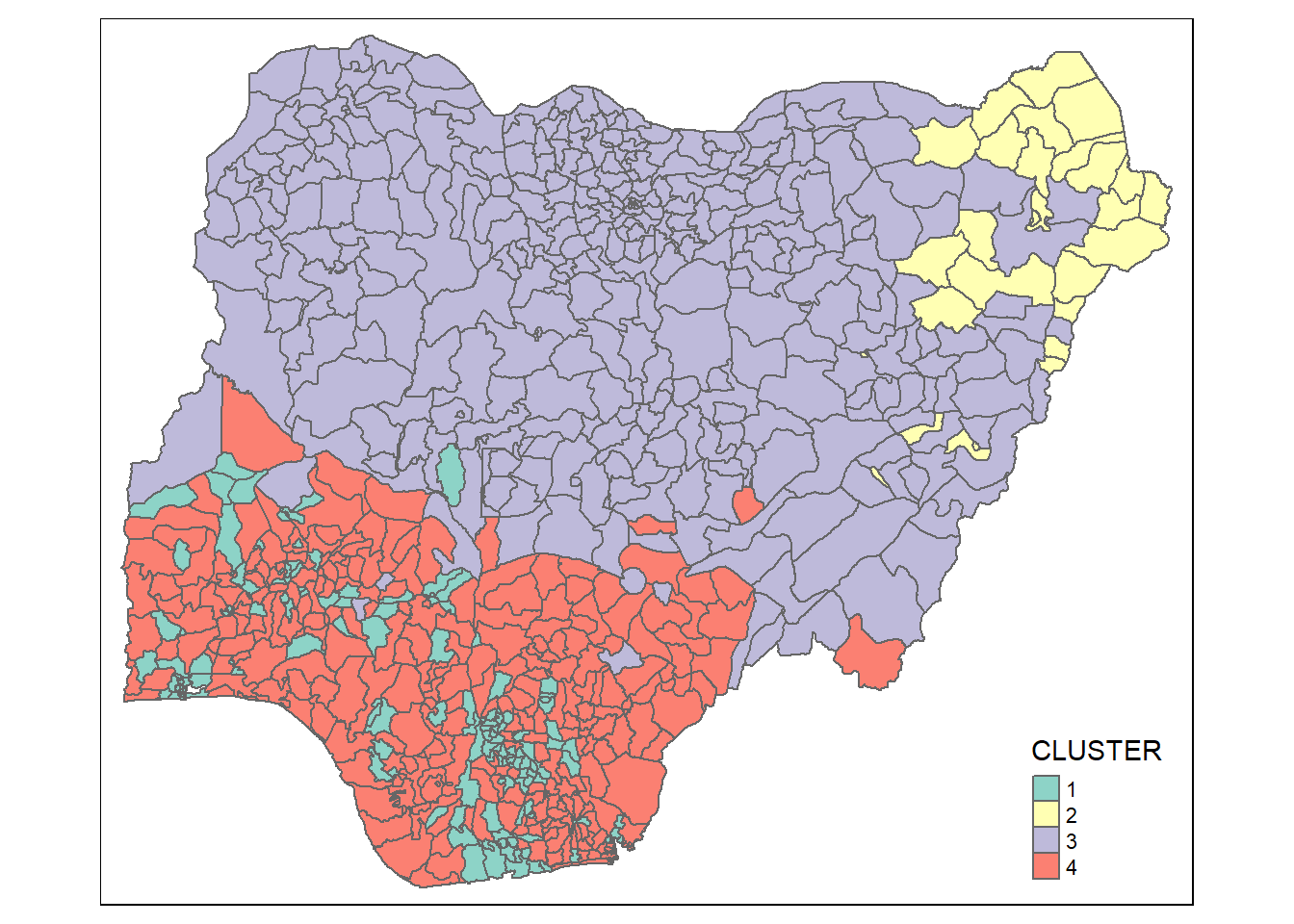

qtm(nga_wp_sf_Gcluster, "CLUSTER")

Visual Interpretation of Clusters

Visualising individual clustering variable

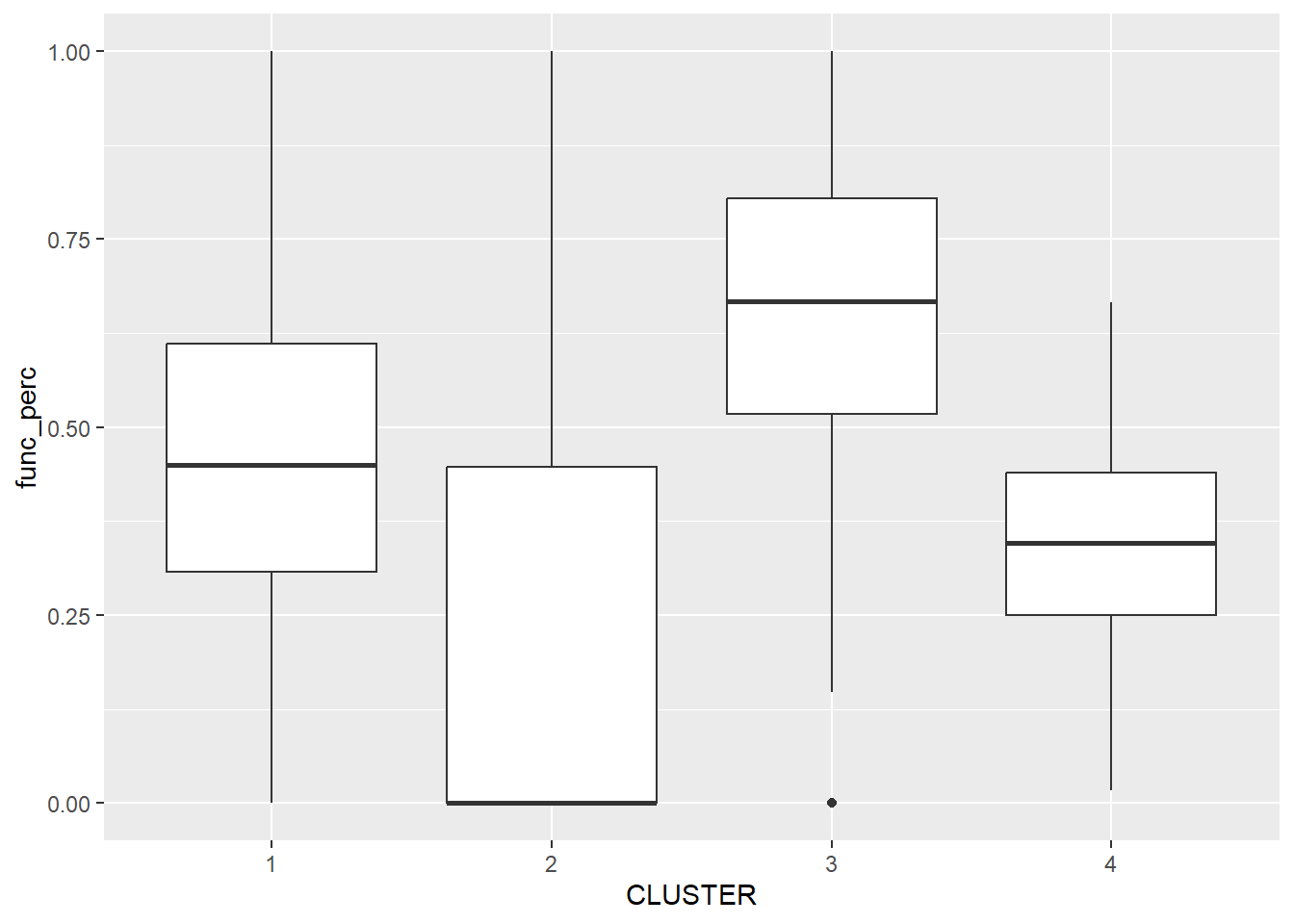

The code chunk below is used to reveal the distribution of a clustering variable (i.e func_perc) by cluster.

ggplot(data = nga_wp_sf_Gcluster,

aes(x = CLUSTER, y = func_perc)) +

geom_boxplot()

The boxplot reveals Cluster 3 displays the highest mean percentage of functional water points. This is followed by Cluster 1, 4 and 2.

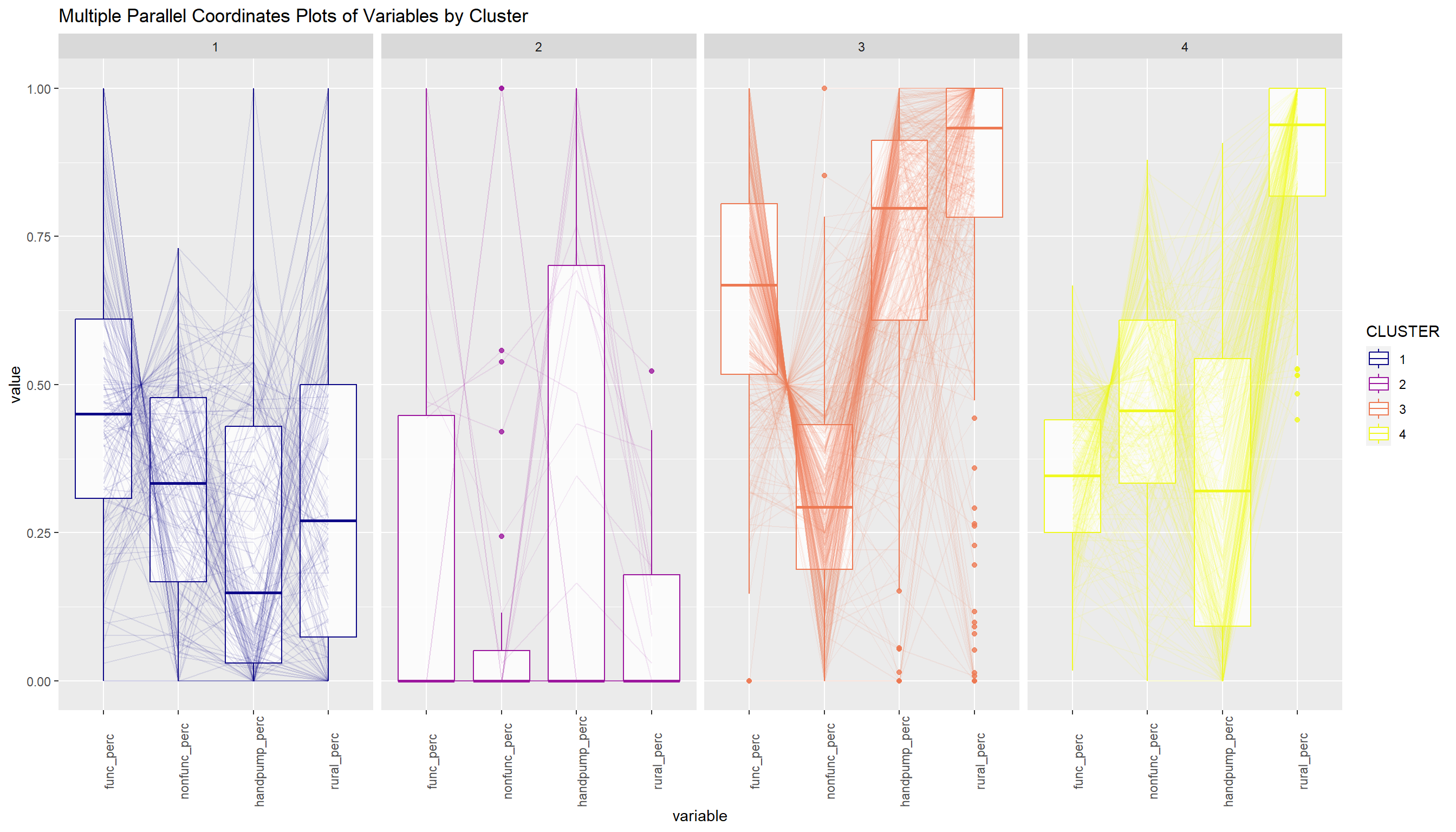

Multivariate Visualisation

The code chunk below uses ggparcoord() of GGally package to display a parallel coordinate plot that can be used to reveal clustering variables by cluster very effectively.

ggparcoord(data = nga_wp_sf_Gcluster,

columns = c(4:6, 10),

scale = "globalminmax",

alphaLines = 0.1,

boxplot = TRUE,

title = "Multiple Parallel Coordinates Plots of Variables by Cluster",

groupColumn = "CLUSTER") +

facet_grid(~ CLUSTER) +

theme(axis.text.x = element_text(angle = 90)) +

scale_color_viridis(option = "C", discrete=TRUE)

From the above, we can infer that Cluster 3 consists of LGAs having higher average of func_perc, handpump_perc and rural_perc. This may suggest this Cluster consists of LGAs which are predominantly specialised in farming with abundant functional water points.